Simulating 500 million years of evolution with a language model

Revolutionary protein LLM that combines sequence, structure, and function in one generative model. Combining function and structure in the same model is an achievement similar to the latest multimodal LLMs, which incorporate text and images into a single model.

Works remarkably well at all scales, with the 98B model achieving truly impressive results purely from sequence.

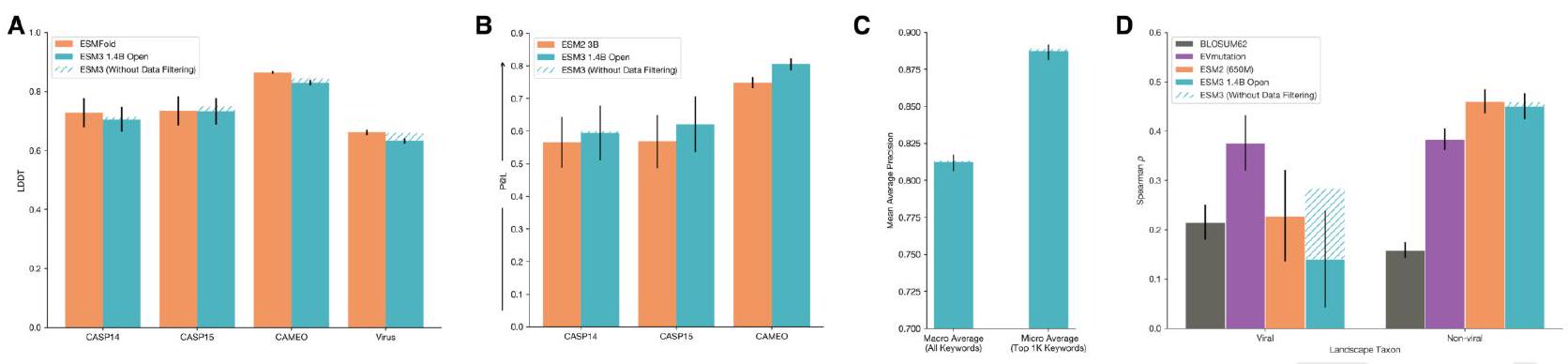

Performs less well than AlphaFold2, and AlphaFold2 performs significantly worse than AlphaFold3. In addition, recent work (10.1038/s41592-023-02087-4) shows that AlphaFold predictions are valuable hypotheses that accelerate but do not replace experimental structure determination.

After being laid off from Facebook ex-meta meta-axed, the team started a new company, EvolutionaryScale, funded by Lux Capital, NVentures (Nvidia), and AWS.

ESM3 is the latest model from the protein AI team, formerly at Facebook and now at EvolutionaryScale.

Allows for incorporation of sequence, structure and function in a masked transformer.

Three scales of model: 1.4 billion, 7 billion, and 98 billion parameters.

1.4 Billion parameter model released on github https://github.com/evolutionaryscale/esm

The team left Facebook to become EvolutionaryScale. $142 million in seed funding (cen.acs.org).

ESMFold performed significantly worse than AlphaFold2 and other competitors in CASP15. https://predictioncenter.org/casp15/zscores_final.cgi

AlphaFold3 is now released. Results in the paper show AlphaFold2 numbers. AlphaFold3 is substantially more accurate than AlphaFold2. 10.1038/s41586-024-07487-w

CASP16 is underway right now, finishing soon. Interesting time to release this paper as a preprint and then to bioRxiv.

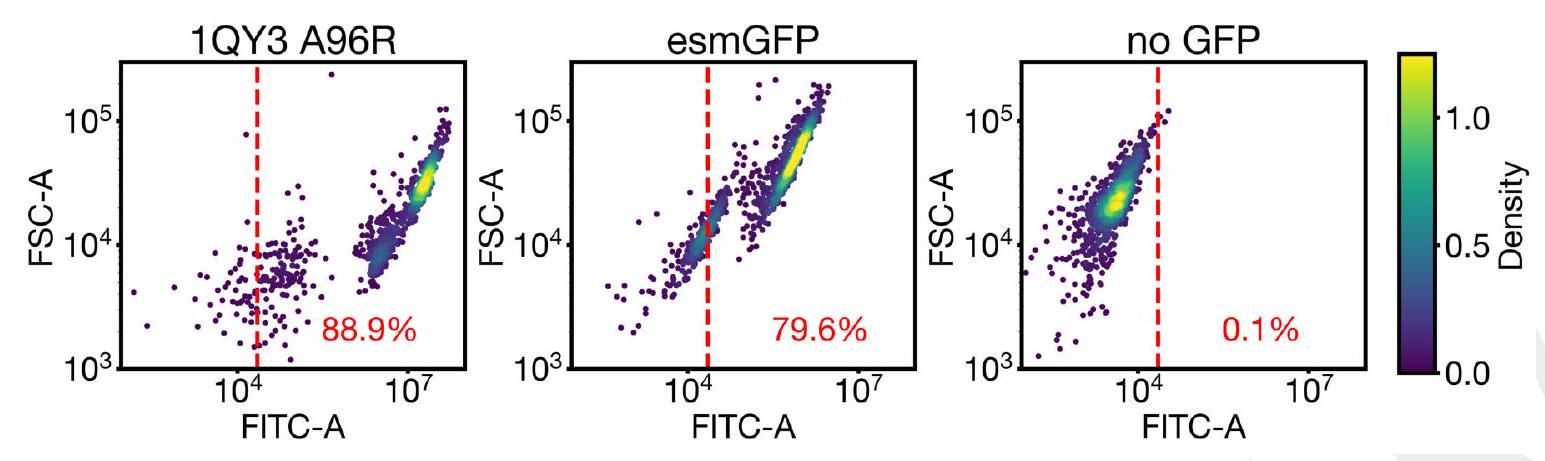

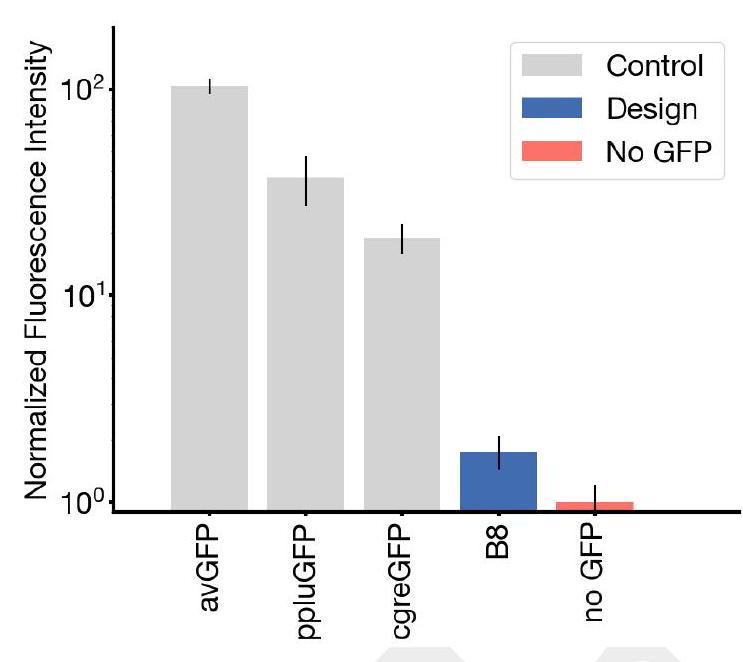

The experiment using GFP as a self-reporter is creative and elegant, but unorthodox. Some of their designed fluorophores take days to develop.

Total cost of training the model is estimated at approximately $12M. Total seed funding is $140M. Estimate for total required is $268 ex-meta.

The company aims to establish revenue streams through partnerships, usage fees, and revenue sharing agreements, with potential collaborations with pharmaceutical companies and researchers to incorporate ESM3 into their workflows.

"an effort that may span a decade" ex-meta

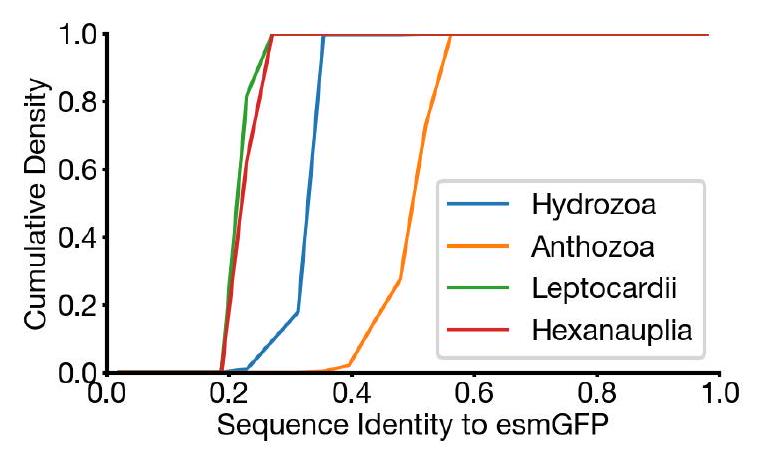

Their experiment basically ensures that they find a fairly distant relative of GFP if one exists, and one does: tagRFP, an existing red fluorescent protein from another organism. tagRFP has a similar fold to esmGFP but forms a different fluorophore.

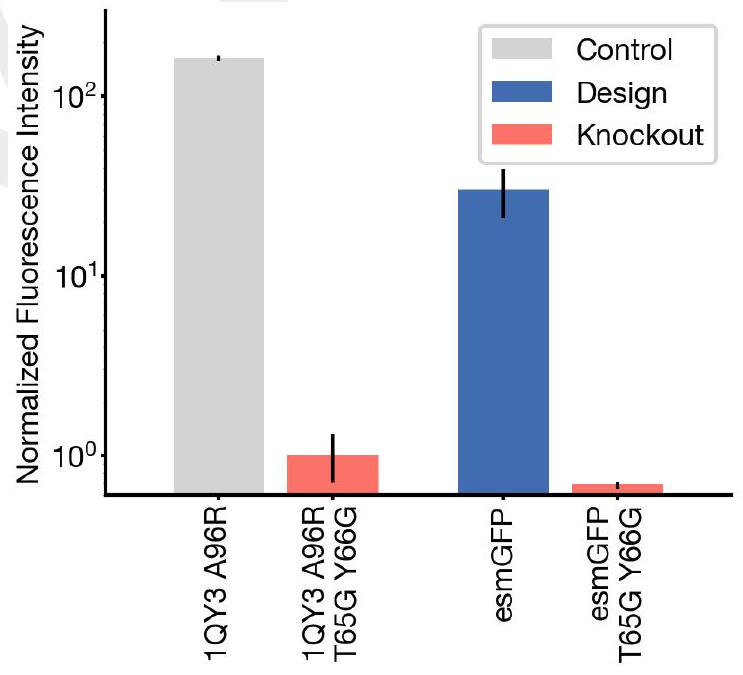

They then did a second experiment to improve the functioning of this GFP and recovered the function.

https://www.sciencedirect.com/science/article/pii/S0959440X23000684

OmegaFold [43] and ESMfold [44] are two implementations that seem similar to AlphaFold but without using the MSA. However, whether the predictions using these models use a single sequence can be questioned. The performance is significantly worse for (orphan) proteins that do not have many homologs in the sequence databases, i.e. the language models appear to memorise the MSA. ESMfold is computationally efficient and has been used to predict the structure of all proteins from an extensive meta-genomics database [45]. At CASP15, these methods performed significantly worse than AlphaFold.

retrieved from: https://evolutionaryscale-public.s3.us-east-2.amazonaws.com/research/esm3.pdf

Preview 2024-06-25. Pending submission to bioRxiv. Copyright 2024 by the authors.

Alexander Rives - Evolutionary Scale Language Models

Great video by Alexander Rives, long and technical with lots of great information. Wonderful overview of the field of generative AI for proteins. Interesting aside in the questions at the end about whether researchers in academia can compete with companies like Meta and Google and their vast resources, where he mentions that he doesn't know how much the models cost to train.

Protein Language Models

Really nice background on protein LLMs from Rosetta Commons.

In this video, language models are broken down in broad strokes and reimagined for protein modeling, wherein protein sequence patterns are identified and then used to predict new sequences following the learnable parameters. The video explores IgLM as a case study, covering generative language models, controllable generation of sequences, infilled protein libraries, and likelihood estimation.

Efficient Evolution of Human Antibodies From General Protein Language Models and Sequence Info Alone

Brian Hie, Stanford & Meta AI

Abstract: Natural evolution must explore a vast landscape of possible sequences for desirable yet rare mutations, suggesting that learning from natural evolutionary strategies could accelerate artificial evolution. Here, we report that deep learning algorithms known as protein language models can evolve human antibodies with high efficiency, despite providing the models with no information about the target antigen, binding specificity, or protein structure, and also requiring no additional task-specific finetuning or supervision. We performed language-model-guided affinity maturation of seven diverse antibodies, screening 20 or fewer variants of each antibody across only two rounds of evolution. Our evolutionary campaigns improved the binding affinities of four clinically relevant antibodies up to 7-fold and three unmatured antibodies up to 160-fold across diverse viral antigens, with many designs also demonstrating improved thermostability and viral neutralization activity. Notably, our algorithm requires only a single wildtype sequence and computes recommended amino acid changes in less than a second. Moreover, the same models that improve antibody binding also guide efficient evolution across diverse protein families and selection pressures, indicating that these results generalize to many natural settings. Contrary to prevailing notions of evolution as difficult and resource-intensive, our results suggest that when constrained to a narrow manifold of evolutionary plausibility, evolution can become much easier, which we refer to as the “efficient manifold hypothesis.”

Meta ESM-2 Fold - AI faster than Alphafold 2

Fairly standard AI pumping video, this time on ESMfold.

https://www.youtube.com/embed/aPCqzrscY4w

Another AI pumping video for ESM. Fairly good.

The State of Protein Structure Prediction and Friends

The State of Protein Structure Prediction and Friends, Mohammed AlQuraishi (Columbia)

AlphaFold2 revolutionized structural biology by accurately predicting protein structures from sequence. Its implementation however (i) lacks the code and data required to train models for new tasks, such as predicting alternate protein conformations or antibody structures, (ii) is unoptimized for commercially available computing hardware, making large-scale prediction campaigns impractical, and (iii) remains poorly understood with respect to how training data and regimen influence accuracy. Here we report OpenFold, an optimized and trainable version of AlphaFold2. We train OpenFold from scratch and demonstrate that it fully reproduces AlphaFold2's accuracy. By analyzing OpenFold training, we find new relationships between data size/diversity and prediction accuracy and gain insights into how OpenFold learns to fold proteins during its training process.

EvolutionaryScale, a new startup founded by former Meta researchers who specialized in AI language modeling for biology, has successfully raised $40 million in seed financing, led by Lux Capital. The company, valued at $200 million, aims to advance the development of biological large language models (LLMs), with significant investments from AI investors Nat Friedman and Daniel Gross.

The core innovation backing EvolutionaryScale lies in its founders' prior work at Meta AI, where they developed a transformer-based model for predicting protein structures, analogous to AI models like OpenAI's GPT-4. This model has already contributed to creating a database containing 700 million potential 3D protein structures, a resource that holds promise for accelerating drug discovery, environmental cleanup efforts, and the synthesis of industrial chemicals by providing insights into the molecular architecture of proteins and their interactions.

EvolutionaryScale's strategy hinges on the "scaling hypothesis" for AI in biology, betting that enlarging the model's dataset and complexity will lead to breakthroughs similar to those seen in natural language processing around 2018. They project significant investments in computing power, forecasting expenditures of up to $278 million in the third year, with the goal of expanding their model to incorporate a wide array of biological data sources beyond protein structures.

Despite the competition from established players like DeepMind's AlphaFold and emerging startups aiming to leverage LLMs for biomedical applications, EvolutionaryScale aspires to differentiate itself by developing a versatile and general-purpose AI model for biology. This ambition is underscored by lofty goals, including the design of programmable cells for cancer treatment and molecular machines for environmental restoration.

Given the founders' background, the project is receiving notable attention in both the biotech and AI fields. However, the company is clear about the challenges ahead, emphasizing a long-term vision that might require a decade to realize fully, particularly in terms of producing usable products and therapies. This initiative represents a significant and bold investment in the intersection of AI and biotech.

https://colab.research.google.com/github/evolutionaryscale/esm/blob/main/examples/generate.ipynb

Nice Google Colab to demonstrate ESM. It has nice visualizations of the proteins, although the proteins should probably be superimposed, not side by side. This is a TODO(sness) item.

https://github.com/evolutionaryscale/esm

They have a really nice way to run ESM using HuggingFace, a pip package for esm and some nice sample code. I got this running, pretty easy to get going. Unfortunately only the 1.4 billion parameter model of course.

https://ca.finance.yahoo.com/news/evolutionaryscale-launches-esm3-milestone-ai-100000341.html

https://www.reddit.com/r/singularity/comments/1dole9a/esm3simulating500millionyearsofevolution/

https://www.php.cn/faq/1796510087.html

https://axios.com/2024/06/25/ai-biotech-generative-model-protein-design

https://evolutionaryscale-public.s3.us-east-2.amazonaws.com/research/esm3.pdf

https://www.nature.com/articles/s41592-023-01790-6

https://analyticsindiamag.com/protein-wars-its-esmfold-vs-alphafold/

https://salvatore-raieli.medium.com/metas-esmfold-the-rival-of-alpahfold2-2223b67f6021

GFP search term yields lots of ads with Google Search

We used ESMFold in HTGAA 2023. We did not use it in 2024 and just used AlphaFold3. AlphaFold3 is really awesome btw.

Here is a student that used it:

https://complex-bike-918.notion.site/Protein-Design-df2978ed760e4f368b0d236b40212b01

Thomas Hayes 1 & Roshan Rao 1 & Halil Akin 1 & Nicholas James Sofroniew 1 & Deniz Oktay 1 & Zeming Lin 1 & Robert Verkuil 1 & Vincent Quy Tran 2 3 Jonathan Deaton 1 Marius Wiggert 1 Rohil Badkundri 1 Irhum Shafkat 1 Jun Gong 1 Alexander Derry 1 Raul Santiago Molina 1 Neil Thomas 1 Yousuf Khan 4 Chetan Mishra 1 Carolyn Kim 1 Liam J. Bartie 2 Patrick D. Hsu 2 3 Tom Sercu 1 Salvatore Candido 1 Alexander Rives 1 †

1 EvolutionaryScale, PBC 2 Arc Institute 3 University of California, Berkeley 4 Work done during internship at EvolutionaryScale, PBC

†Correspondence to arives@evolutionaryscale.ai.

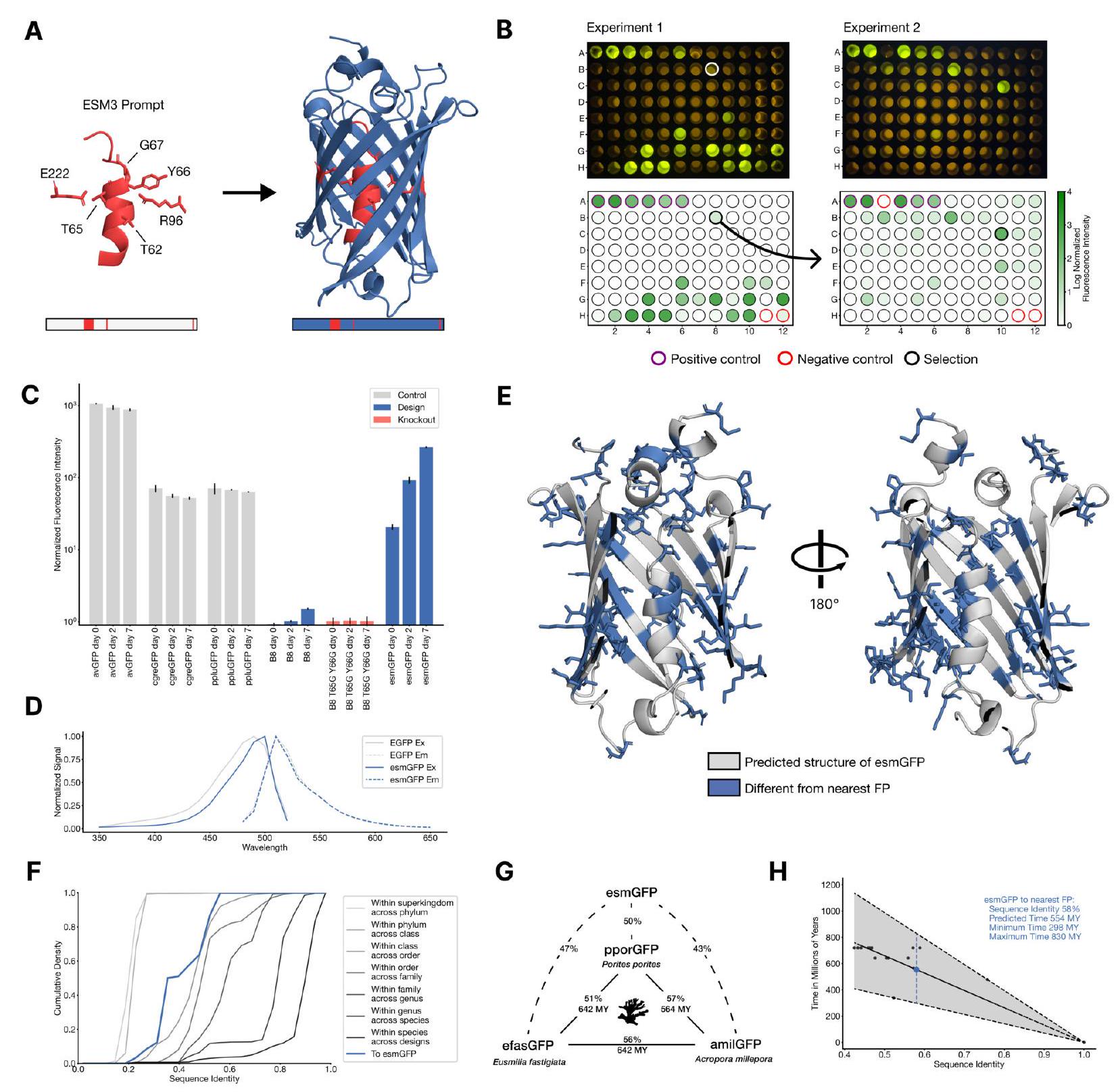

More than three billion years of evolution have produced an image of biology encoded into the space of natural proteins. Here we show that language models trained on tokens generated by evolution can act as evolutionary simulators to generate functional proteins that are far away from known proteins. We present ESM3, a frontier multimodal generative language model that reasons over the sequence, structure, and function of proteins. ESM3 can follow complex prompts combining its modalities and is highly responsive to biological alignment. We have prompted ESM3 to generate fluorescent proteins with a chain of thought. Among the generations that we synthesized, we found a bright fluorescent protein at far distance ( $58 \%$ identity) from known fluorescent proteins. Similarly distant natural fluorescent proteins are separated by over five hundred million years of evolution.

The proteins that exist today have developed into their present forms over the course of billions of years of natural evolution, passing through a vast evolutionary sieve. In parallel experiments conducted over geological time, nature creates random mutations and applies selection, filtering proteins by their myriad sequences, structures, and functions.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - Proteins have developed into their current forms over billions of years.

As a result, the patterns in the proteins we observe reflect the action of the deep hidden variables of the biology that have shaped their evolution across time. Gene sequencing surveys of Earth's natural diversity are cataloging the sequences $(1-3)$ and structures $(4,5)$ of proteins, containing billions of sequences and hundreds of millions of structures that illuminate patterns of variation across life. A consensus is building that underlying these sequences is a fundamental language of protein biology that can be understood using large language models (6-10).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - The observed patterns in proteins are shaped by the underlying biology that has evolved over time.

A number of language models of protein sequences have now been developed and evaluated ( $9,11-14$ ). It has been found that the representations that emerge within language models reflect the biological structure and function of proteins $(6,15,16)$, and are learned without any supervision on those properties, improving with scale $(5,17,18)$. In artificial intelligence, scaling laws have been found that predict the growth in capabilities with increasing scale, describing a frontier in compute, parameters and data (19-21).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - A number of language models of protein sequences have been developed and evaluated.

We present ESM3, a frontier multimodal generative model, that reasons over the sequences, structures, and functions of proteins. ESM3 is trained as a generative masked language model over discrete tokens for each modality. Structural reasoning is achieved by encoding three-dimensional atomic structure as discrete tokens rather than with the complex architecture and diffusion in three-dimensional space employed in recent predictive (22) and generative models $(14,23-25)$ of proteins. All-to-all modeling of discrete tokens is scalable, and allows ESM3 to be prompted with any combination of its modalities, enabling controllable generation of new proteins that respect combinations of prompts.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 is a frontier multimodal generative model.

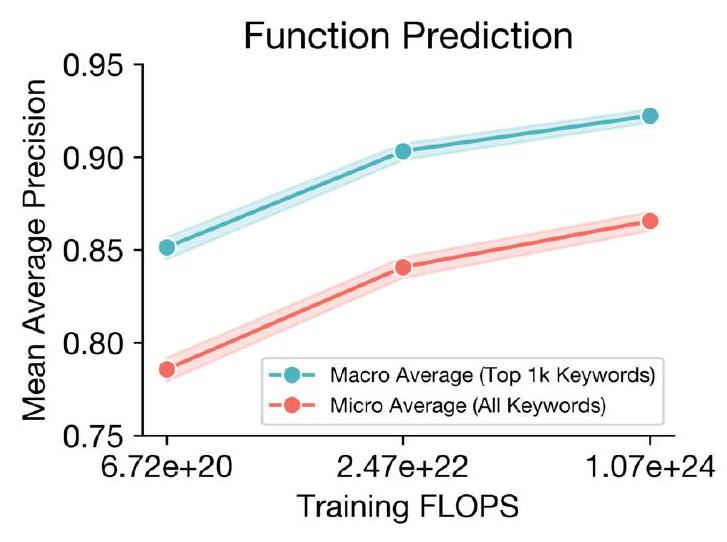

ESM3 at its largest scale was trained with $1.07 \times 10^{24}$ FLOPs on 2.78 billion proteins and 771 billion unique tokens, and has 98 billion parameters. Scaling ESM3 to this 98 billion parameter size results in improvements in the representation of sequence, structure, and function, as well as on generative evaluations. We find that ESM3 is highly responsive to prompts, and finds creative solutions to complex combinations of prompts, including solutions for which we can find no matching structure in nature. We find that models at all scales can be aligned to better follow prompts. Larger models are far more responsive to alignment, and show greater capability to solve the hardest prompts after alignment.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 was trained with $1.07 \times 10^{24}$ FLOPs.

ME: Please estimate the cost of training this model.

AI: To estimate the cost of training the ESM3 model with 98 billion parameters, we will rely on information provided and extrapolate using approximate costs for training deep learning models of similar scale as of 2023.

Given the significant assumptions and variability in cloud pricing, discounts, and efficiencies, this estimate is very rough. The actual cost could be lower or higher based on these factors. Additionally, advancements in technology and cloud infrastructure could also influence training costs.
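The arithmetic behind this kind of estimate can be made explicit. The sketch below is only a back-of-envelope calculation: the FLOP count comes from the paper, but the per-GPU peak throughput, the utilization fraction, and the hourly prices are all assumptions chosen for illustration, not figures from the paper or from EvolutionaryScale.

```python
def training_cost_usd(total_flops, peak_flops_per_gpu, utilization, usd_per_gpu_hour):
    """Return (gpu_hours, cost_usd) for a training run needing `total_flops`.

    All arguments except total_flops are assumptions supplied by the caller.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Sustained throughput is peak throughput scaled by hardware utilization.
    effective_flops_per_second = peak_flops_per_gpu * utilization
    gpu_seconds = total_flops / effective_flops_per_second
    gpu_hours = gpu_seconds / 3600.0
    return gpu_hours, gpu_hours * usd_per_gpu_hour


# Reported in the paper for the 98B model.
ESM3_98B_FLOPS = 1.07e24

# Hypothetical scenarios: (assumed peak FLOP/s, assumed utilization, assumed $/GPU-hour).
SCENARIOS = {
    "A100-class, 35% utilization, $2.00/GPU-hour": (312e12, 0.35, 2.00),
    "H100-class, 40% utilization, $3.00/GPU-hour": (989e12, 0.40, 3.00),
}

if __name__ == "__main__":
    for name, (peak, util, price) in SCENARIOS.items():
        hours, cost = training_cost_usd(ESM3_98B_FLOPS, peak, util, price)
        print(f"{name}: {hours:,.0f} GPU-hours, ${cost / 1e6:,.1f}M")
```

The result is very sensitive to the assumed utilization and price, and it covers only the final training run, not experiments, failed runs, storage, or staff.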

We report the generation of a new green fluorescent protein (GFP) with ESM3. Fluorescent proteins are responsible for the glowing colors of jellyfish and corals (26) and are important tools in modern biotechnology (27). They share an elegant structure: an eleven stranded beta barrel with a helix that threads its center, which scaffolds the formation of a light-emitting chromophore out of the protein's own atoms. This mechanism is unique in nature: no other protein spontaneously forms a fluorescent chromophore out of its own structure, suggesting that producing fluorescence is hard even for nature.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - A new green fluorescent protein (GFP) with ESM3 has been generated.

Our new protein, which we have named esmGFP, has $36 \%$ sequence identity to Aequorea victoria GFP, and $58 \%$ sequence identity to the most similar known fluorescent protein. Despite GFP's intense focus as a target for protein engineering over several decades, as far as we are aware, proteins this distant have only been found through the discovery of new GFPs in nature.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - The new protein is named esmGFP.
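To make the identity figures concrete, here is a minimal sketch of how percent sequence identity can be computed from two already-aligned sequences. The denominator convention (only columns where both sequences have a residue) is an assumption; this section of the paper does not say which convention or alignment tool was used, and the toy sequences below are made up for illustration, not real GFP sequences.

```python
def percent_identity(aligned_a, aligned_b, gap="-"):
    """Percent identity over alignment columns where neither sequence has a gap."""
    if len(aligned_a) != len(aligned_b):
        raise ValueError("aligned sequences must have the same length")
    matches = 0
    compared = 0
    for a, b in zip(aligned_a, aligned_b):
        if a == gap or b == gap:
            continue  # skip gapped columns (assumed convention)
        compared += 1
        if a == b:
            matches += 1
    if compared == 0:
        return 0.0
    return 100.0 * matches / compared


if __name__ == "__main__":
    # Toy aligned fragments, hypothetical and for illustration only.
    print(percent_identity("MSKGEEL-FTG", "MSKGAELLFTG"))
```

Other conventions divide by the alignment length or by the shorter sequence length, which can shift the reported percentage by several points.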

Similar amounts of diversification among natural GFPs have occurred over predictable timescales. Understood in these terms, the generation of a new fluorescent protein at this distance from existing proteins appears to be equivalent to simulating over 500 million years of evolution.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - Similar amounts of diversification have occurred among natural GFPs (Green Fluorescent Proteins).

ME: Please analyze this from the point of view of a reviewer who is an expert in both LLMs and biotechnology.

AI: As an expert reviewer with a background in both large language models (LLMs) and biotechnology, the generation of a new green fluorescent protein (GFP) through the use of ESM3—presumably an advanced machine learning algorithm or platform—is both intriguing and a noteworthy advancement in the field. My analysis will cover the novelty of the approach, its technical validity, implications for the field, and potential areas for further exploration.

The development of esmGFP marks a significant achievement given its low sequence identity to Aequorea victoria GFP (36%) and yet a relatively higher identity to the closest known fluorescent protein (58%). The disparity in sequence identity with known GFPs highlights the innovative success of applying ESM3 in discovering or designing novel proteins, potentially opening new avenues for similar advancements using computational models. This approach indicates a paradigm shift from traditional experimental or evolutionary discovery processes to computational design and simulation, aligning with the growing integration of artificial intelligence (AI) in biotechnological advancements.

The claim of simulating over 500 million years of evolution is profound and necessitates a rigorous examination of the methodologies employed. The selection criteria, machine learning algorithms, datasets, and computational parameters integral to ESM3's function in generating esmGFP are critical for assessing the model's robustness and the novelty's reproducibility. Additionally, experimental validation of esmGFP's fluorescence, structural stability, and functionality compared to naturally occurring GFPs is essential to support the computational findings. This dual approach enhances the scientific credibility of AI-generated biological innovations.

The utility of GFPs in molecular and cellular biology as tags for gene expression, protein localization, and interaction studies has been transformative. An AI-driven leap in fluorescent protein engineering could expand the toolkit available to researchers, offering proteins with potentially novel properties (e.g., differing wavelengths of fluorescence, enhanced stability, or reduced toxicity). Such advancements could significantly impact molecular biology, diagnostic procedures, and therapeutic applications, pushing the boundaries of what is currently achievable.

Several areas warrant further exploration following the generation of esmGFP. Initially, understanding the structural and functional properties of esmGFP in comparison to naturally occurring counterparts would be invaluable. Investigating how esmGFP behaves in different environments and in vivo systems could highlight its potential applications and limitations. Furthermore, exploring the scalability of the ESM3-driven process for generating other proteins with desirable characteristics could revolutionize protein engineering.

Additionally, ethical considerations surrounding AI-generated biological materials must be addressed, including safety, patentability, and accessibility. As computational methods accelerate the pace of biological discoveries, establishing guidelines and regulatory frameworks will be imperative.

In summary, the creation of esmGFP through ESM3 represents a compelling intersection of computational science and biotechnology, showcasing the potential to accelerate evolutionary processes and innovate beyond natural constraints. While the technical and ethical aspects demand thorough examination, the overarching trajectory indicates a promising future for AI in advancing biotechnological research and applications. This work not only underscores the importance of interdisciplinary collaboration but also reinforces the transformative impact of computational tools in uncovering the mysteries of biology.

ME: Please consider RFPs and how they are related to GFPs.

AI: Red fluorescent proteins (RFPs) are related to green fluorescent proteins (GFPs) in several fundamental ways, both being part of the broader family of fluorescent proteins (FPs) that are critical tools in molecular and cellular biology. The relationship between RFPs and GFPs, and their relevance to the generation of the new green fluorescent protein (esmGFP) using ESM3, offers further dimensions to the analysis from both the biotechnological and computational perspectives.

Structural Homology: GFPs and RFPs share a similar basic structure, which is the β-barrel structure wrapping around the chromophore. This conserved architecture facilitates the process where the protein absorbs light at one wavelength and emits it at another, creating fluorescence. While the chromophores within GFPs and RFPs differ in structure leading to the emission of green and red light, respectively, these differences arise from variations in the sequence that affect the post-translational modifications and the environment of the chromophore.

Evolutionary Link: GFPs and RFPs are evolutionarily related. They originated from a common ancestral protein, which has diverged through mutations and natural selection to produce proteins with different fluorescent properties. This evolutionary link is crucial when considering the diversification among natural FPs and the simulated evolution used in generating esmGFP, as it highlights the potential for discovering or designing FPs across the spectrum by mimicking or accelerating evolutionary processes through computational approaches.

Expanding the Fluorescent Protein Palette: The creation of esmGFP through ESM3 underscores the potential to extend these computational methodologies to engineer not just GFP variants but also RFPs and other FPs with desired properties, including altered fluorescence, improved stability, or enhanced brightness. By leveraging artificial intelligence and sophisticated algorithms, researchers can explore a vast space of genetic sequences that natural evolution might not have accessed, potentially creating a diverse palette of FPs for advanced imaging techniques.

Biotechnological and Research Applications: GFPs and RFPs are foundational in biotechnology for tagging proteins, tracking gene expression, visualizing cellular processes, and more. By designing novel GFPs (like esmGFP) and extending these efforts to RFPs and beyond, researchers can develop more sensitive and versatile tools for real-time imaging and diagnostic applications, enabling the study of biological processes with unprecedented resolution and spectral range.

Interdisciplinary Collaboration: The evolution from traditional cloning and harvesting of FPs from natural sources to the computational generation of esmGFP illuminates the importance of interdisciplinary collaboration. Combining computational biology, machine learning, genetic engineering, and biochemistry is essential for the next wave of discoveries in the fluorescent protein domain.

The interconnectedness between GFPs and RFPs, through structural, functional, and evolutionary parallels, enriches the significance of developing esmGFP using a computational approach like ESM3. This strategy not only presents a platform for the innovative generation of FPs but also paves the way for a broadened understanding of protein engineering, offering exciting possibilities for research and practical applications in biotechnology and beyond.

ESM3 reasons over the sequence, structure, and function of proteins. All three modalities are represented by tokens, and are input and output as separate tracks that are fused into a single latent space within the model. ESM3 is trained with a generative masked language modeling objective:

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 reasons over three main aspects: the sequence, structure, and function of proteins.

$$ \mathcal{L}=-\mathbb{E}_{x, m}\left[\frac{1}{|m|} \sum_{i \in m} \log p\left(x_{i} \mid x_{\setminus m}\right)\right] $$

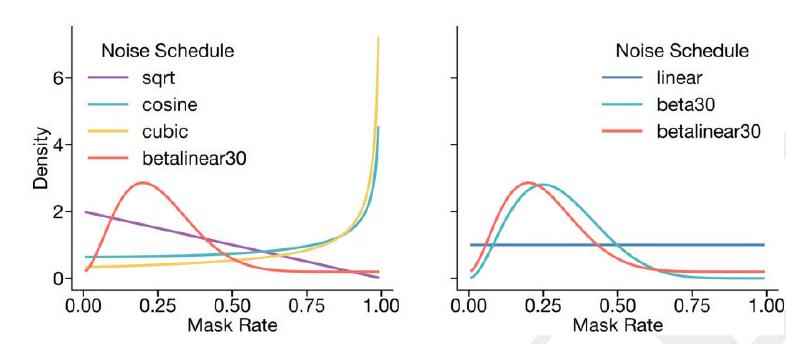

A random mask $m$ is applied to the tokens $x$ describing the protein, and the model is supervised to predict the identity of the tokens that have been masked. During training, the mask is sampled from a noise schedule so that ESM3 sees many different combinations of masked sequence, structure, and function, and predicts completions of any combination of the modalities from any other. This differs from the classical masked language modeling (28) in that the supervision is applied across all possible masking rates rather than a single fixed masking rate. This supervision factorizes the probability distribution over all possible predictions of the next token given any combination of previous tokens, ensuring that tokens can be generated in any order from any starting point (29-31).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - A random mask $m$ is used on the protein tokens $x$.
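The objective described above (average negative log-likelihood of the true tokens at the masked positions, with the mask rate varying from sample to sample) can be sketched in a few lines of NumPy. This is a toy illustration for a single sample, not the authors' implementation: the uniform mask-rate distribution is an assumption standing in for the paper's noise schedule (Appendix A.2.2), and the `log_probs` array stands in for the output of a real model conditioned on the unmasked tokens.

```python
import numpy as np


def sample_mask(length, rng):
    """Sample a boolean mask with a randomly drawn masking rate.

    Assumption: the rate is uniform on [0, 1); the paper uses its own schedule.
    """
    rate = rng.uniform(0.0, 1.0)
    mask = rng.random(length) < rate
    if not mask.any():
        # Guarantee at least one masked position so the loss is defined.
        mask[rng.integers(length)] = True
    return mask


def masked_lm_loss(log_probs, tokens, mask):
    """-(1/|m|) * sum over masked i of log p(x_i | unmasked tokens).

    log_probs: (L, V) array of per-position log-probabilities from a model.
    tokens:    (L,) array of true token ids.
    mask:      (L,) boolean array, True where the token was masked.
    """
    true_token_log_probs = log_probs[np.arange(len(tokens)), tokens]
    return float(-true_token_log_probs[mask].mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    length, vocab = 12, 20
    tokens = rng.integers(0, vocab, size=length)
    # Stand-in for model output: a uniform distribution over the vocabulary.
    log_probs = np.full((length, vocab), -np.log(vocab))
    mask = sample_mask(length, rng)
    print(masked_lm_loss(log_probs, tokens, mask))
```

With a uniform predictor the loss equals log(V) regardless of the mask, which is a convenient sanity check before plugging in a real model.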

To generate from ESM3, tokens are iteratively sampled. Starting from a sequence of all mask tokens, tokens can be sampled one at a time, or in parallel, in any order, until all tokens are fully unmasked (Fig. 1A). Masking is applied independently to sequence, structure, and function tracks, which enables generation from any combination of empty, partial, or complete inputs. ESM3's training objective is also effective for representation learning. We choose a noise schedule that balances generative capabilities with representation learning (Appendix A.2.2).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 uses an iterative sampling method for token generation.
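The iterative unmasking loop can be sketched as follows. This is a toy version for a single track: `toy_model` is a hypothetical stand-in that returns random logits, the `MASK` sentinel is arbitrary, and choosing positions uniformly at random is an assumption (real decoders often unmask the most confident positions first). Setting `num_steps` equal to the length samples one token at a time; setting it to 1 samples everything in parallel.

```python
import numpy as np

MASK = -1  # hypothetical sentinel for a masked position


def toy_model(tokens, vocab_size, rng):
    """Hypothetical stand-in for a trained model: random logits of shape (L, V)."""
    return rng.normal(size=(len(tokens), vocab_size))


def iterative_unmask(length, vocab_size, num_steps, rng, model=toy_model):
    """Start fully masked and unmask a share of positions at every step."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    tokens = np.full(length, MASK, dtype=np.int64)
    for step in range(num_steps):
        masked_positions = np.flatnonzero(tokens == MASK)
        if masked_positions.size == 0:
            break
        # Spread the remaining masked positions evenly over the remaining steps.
        remaining_steps = num_steps - step
        n_unmask = int(np.ceil(masked_positions.size / remaining_steps))
        logits = model(tokens, vocab_size, rng)
        # Numerically stable softmax over the vocabulary axis.
        shifted = logits - logits.max(axis=-1, keepdims=True)
        probs = np.exp(shifted)
        probs /= probs.sum(axis=-1, keepdims=True)
        # Assumption: positions to unmask are chosen uniformly at random.
        chosen = rng.choice(masked_positions, size=n_unmask, replace=False)
        for pos in chosen:
            tokens[pos] = rng.choice(vocab_size, p=probs[pos])
    return tokens


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    print(iterative_unmask(length=16, vocab_size=20, num_steps=4, rng=rng))
```

A partial prompt would correspond to starting from a token array that is only partly `MASK`; the loop itself would not change.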

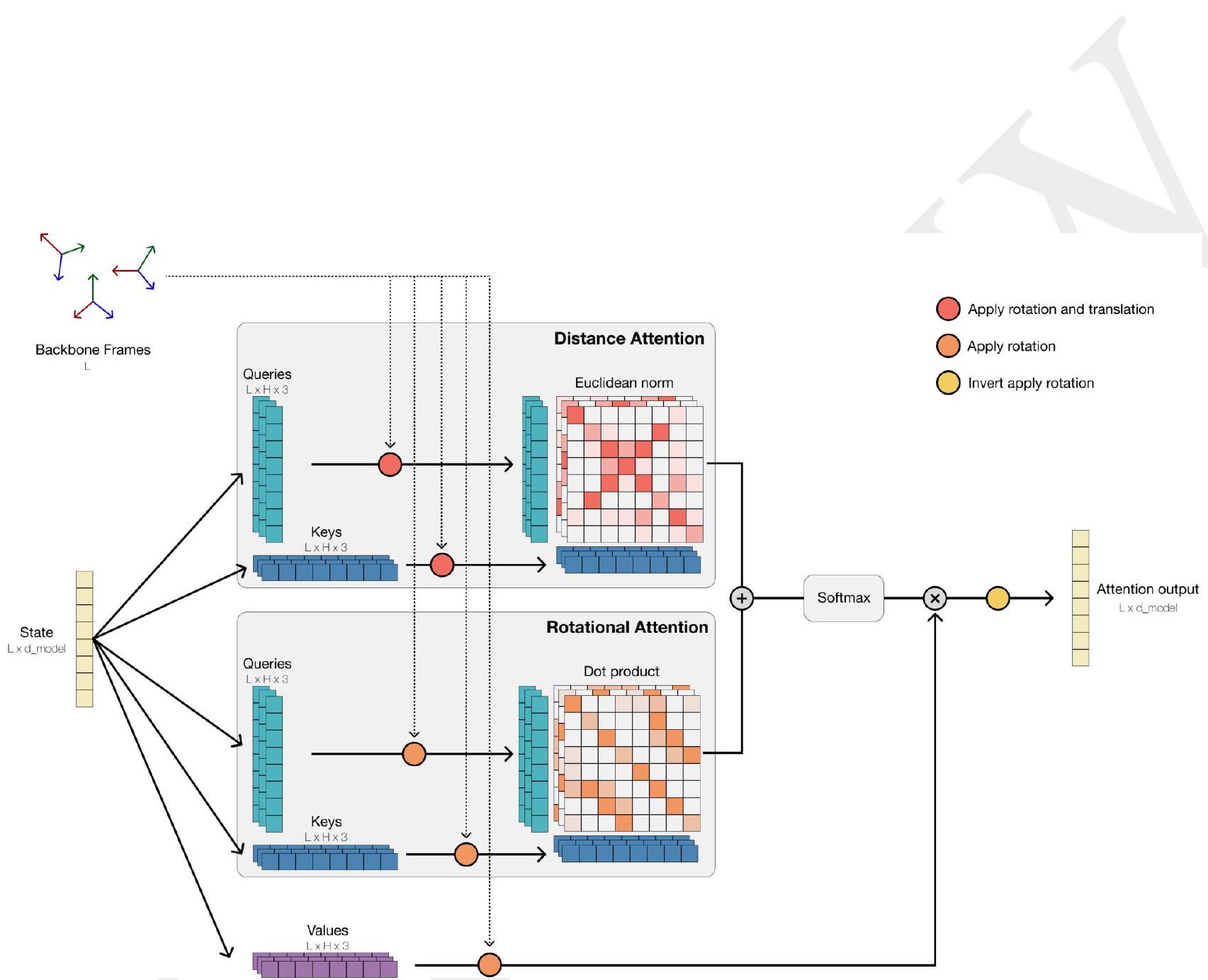

Tokenization enables efficient reasoning over structure. Protein structures are tokenized by a discrete auto-encoder (32), which is trained to compress the high dimensional space of three-dimensional structure into discrete tokens (Fig. 1C). We propose an invariant geometric attention mechanism to efficiently process three-dimensional structure. The mechanism operates in local reference frames defined by the bond geometry at each amino acid, and allows local frames to interact globally through a transformation into the global frame (Appendix A.1.6). This mechanism can be efficiently realized through the same computational primitives as attention (33), and is readily scalable. The local structural neighborhoods around each amino acid are encoded into a sequence of discrete tokens, one for each amino acid.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - Tokenization helps in efficient reasoning over structure.
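To illustrate what "compressing structure into discrete tokens" can mean, here is a sketch of nearest-codebook quantization, a common way for a discrete autoencoder to discretize its encoder output. Whether ESM3's tokenizer works exactly this way is an assumption here; the two-dimensional embeddings and four-entry codebook are invented toy values.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(embedding, codebook):
    """Return the index of the codebook vector closest to the embedding."""
    return min(range(len(codebook)),
               key=lambda k: squared_distance(embedding, codebook[k]))


# Toy codebook: 4 entries, so each residue becomes a token in {0, 1, 2, 3}.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Hypothetical encoder outputs, one per residue's local neighborhood.
residue_embeddings = [[0.1, -0.2], [0.9, 0.8], [0.2, 1.1]]

structure_tokens = [quantize(e, codebook) for e in residue_embeddings]
print(structure_tokens)  # one discrete token per amino acid
```

The result is one integer per residue, which is what lets structure sit alongside sequence as an ordinary token track.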

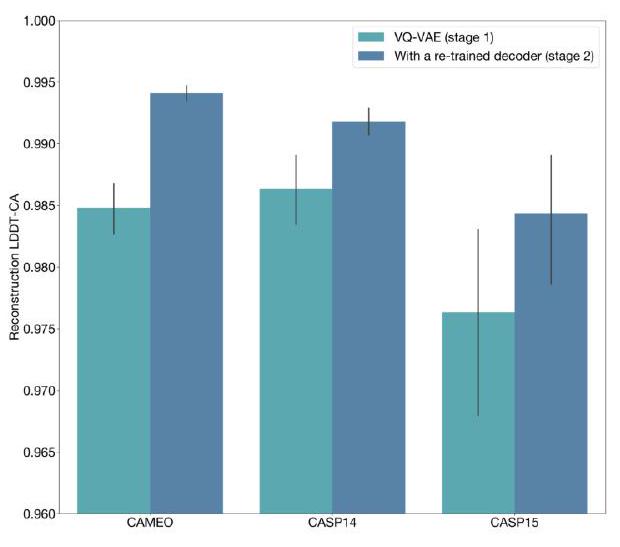

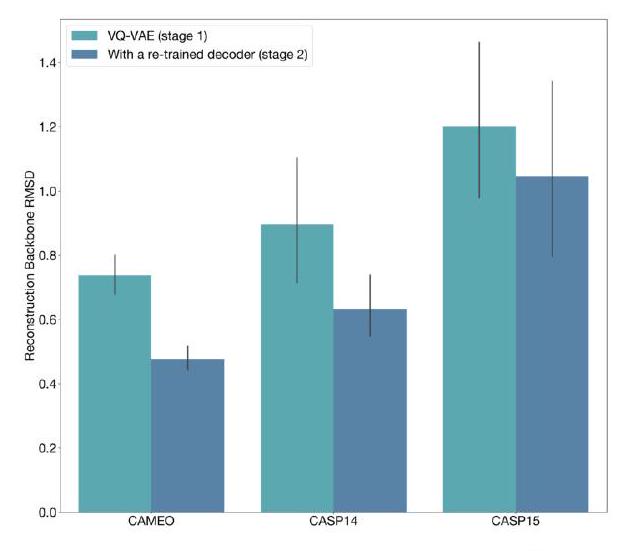

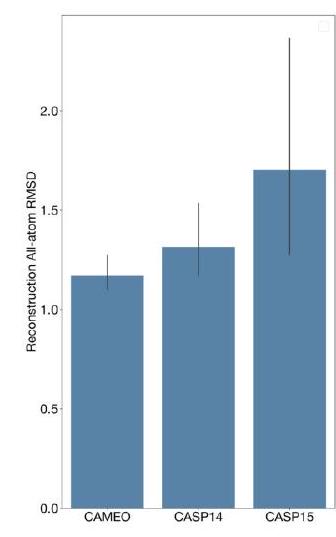

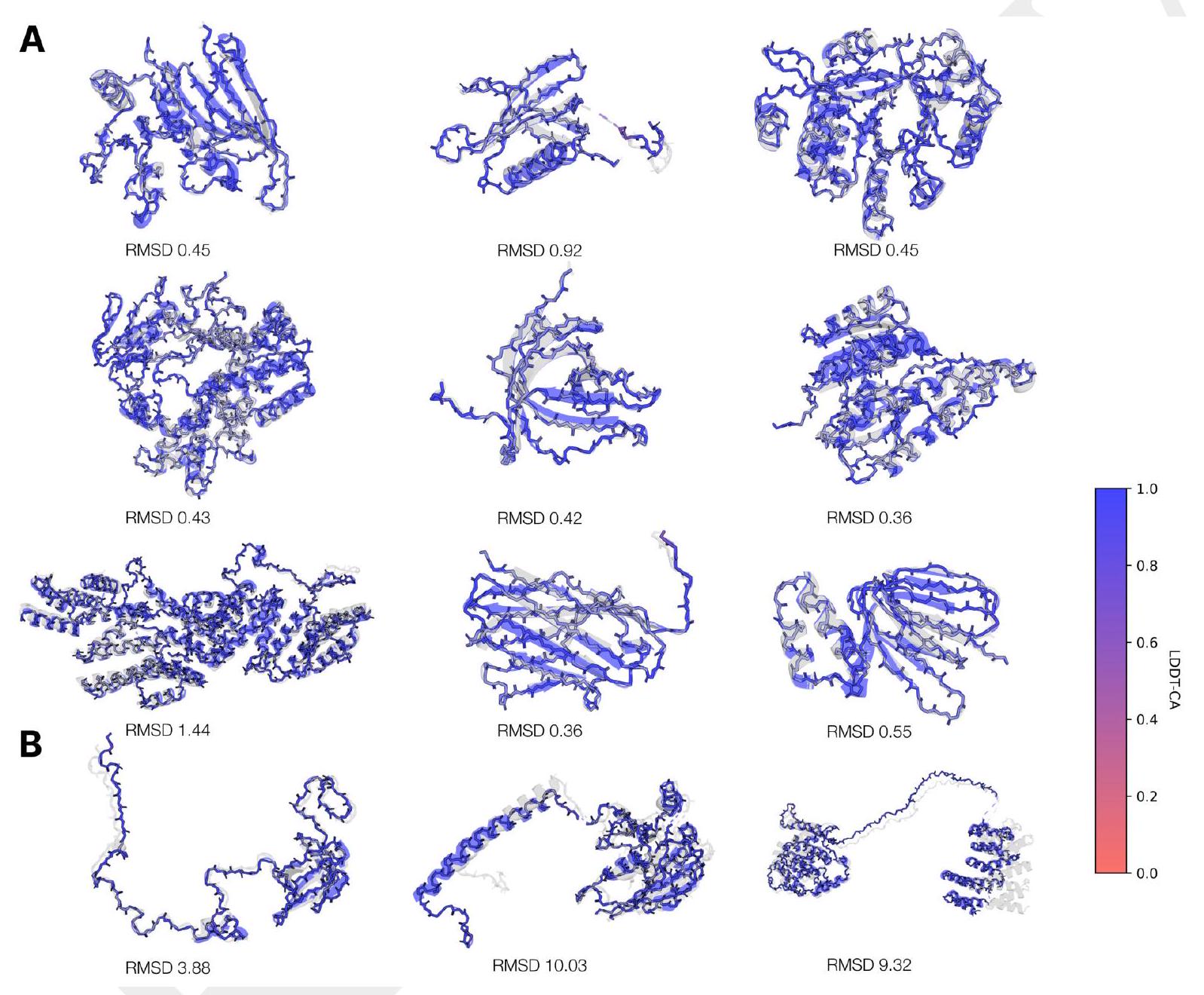

When predicting or generating protein structure, structure tokens output by ESM3 are passed to the decoder, which reconstructs the all-atom structure. The autoencoder is trained to encode and reconstruct atomic coordinates with a geometric loss that supervises the pairwise distances and relative orientations of bond vectors and normals (Appendix A.1.7.3.1). This tokenization delivers near-perfect reconstruction of protein structure ($<0.3$ Å RMSD on CAMEO, Fig. S3), enabling representation of structure at the input and output with atomic accuracy.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - Structure tokens output by ESM3 are used in the prediction or generation of protein structures.

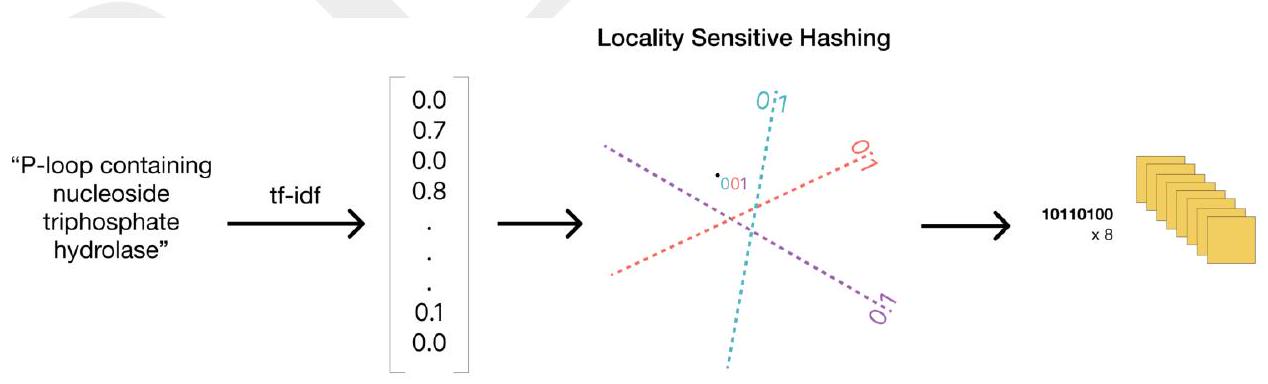

We also find that providing ESM3 direct access to atomic coordinates in the input via a geometric attention projection into the transformer improves the response to atomic coordinate prompts. ESM3 can be conditioned on either or both of tokenized structure and atomic coordinates. We supplement these structure representations with coarse grained tokens encoding secondary structure state (SS8) and solvent accessible surface area (SASA). Function is presented to the model in the form of tokenized keyword sets for each position in the sequence.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3's ability to respond to atomic coordinate prompts is enhanced by providing it with direct access to atomic coordinates through a geometric attention projection.

ESM3 is a bidirectional transformer. While extensive research has gone into creating specialized architectures and training objectives for proteins, we find that tokenization paired with a standard masked language modeling objective and the basic transformer architecture is highly effective for both representation learning and generative modeling. Sequence, structure, and function tracks are input as tokens, which are embedded and fused, then processed through a

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 is a bidirectional transformer.

ME: Please explain to a person without a background in LLMs

AI: Certainly! Let's delve into this complex topic in a way that doesn't require prior knowledge of Large Language Models (LLMs) or specialized biological knowledge.

What is ESM3?

ESM3 is essentially a sophisticated computer program designed to understand and predict various aspects of proteins - which are crucial molecules for life. It can look at the sequence of a protein (the order of its building blocks), its three-dimensional structure, and its function (what it does in the body) in a unique and interconnected way. Think of ESM3 as a very smart biologist that has been trained to analyze proteins in great depth.

How does it work?

The way ESM3 learns is similar to someone trying to complete a puzzle with some of the pieces hidden - it tries to guess the missing pieces based on the overall picture. In technical terms, it gets trained by being shown proteins with some parts 'masked' or hidden, and it learns by trying to predict these hidden parts. This is done over and over with different parts hidden each time, which helps it understand how proteins are built and function at a deep level.

Why is it special?

Understanding Proteins Holistically: ESM3 considers the sequence, structure, and function of proteins all at once. Imagine you only knew what different car parts looked like but had no idea how these parts fit together or what they do. ESM3, on the other hand, understands not only what the parts are but also how they come together and what they're supposed to do - a much fuller understanding of the 'mechanics' of life at the molecular level.

Flexibility in Learning and Prediction: It's trained to predict any part of the protein from any other part, giving it a flexible understanding. This means it can work with incomplete information to make predictions, much like filling in missing words in a sentence based on the context provided by the rest of the sentence.

Protein Structure: Understanding the 3D shape of proteins is crucial because a protein's shape determines its role and function. ESM3 is trained to 'visualize' and predict this structure by learning from hundreds of millions of protein structures, most of them computationally predicted rather than experimentally determined. It does this by breaking down the complex shape into simpler, easier-to-understand pieces.

High Accuracy: This artificial intelligence can predict the structure and function of proteins with remarkable precision, aiding scientists in understanding how different proteins work and interact - potentially accelerating drug discovery and the understanding of diseases.

In essence, ESM3 is like a highly advanced tool for scientists, helping them decipher the complex language of proteins much faster and more accurately than traditional methods. It's a blend of biology, computer science, and mathematics, all working together to unlock the secrets of life at the molecular level.

ME: Please explain for an expert in LLMs

AI: For an expert in Large Language Models (LLMs), delving into the intricacies of ESM3 offers a fascinating insight into the application of LLM methodologies to the domain of protein sequences, structures, and functions. ESM3 represents an avant-garde approach in blending traditional natural language processing (NLP) techniques with biological data to address complex bioinformatics challenges.

Model Architecture and Training Regime

At its core, ESM3 leverages a bidirectional transformer architecture, akin to BERT-style encoders rather than autoregressive, left-to-right models such as GPT, but with critical modifications tailored to handle the multimodal nature of protein data. The model ingests sequences as tokens, analogous to words or characters in NLP, but these tokens represent amino acids, structure descriptors, or functional attributes rather than textual information.

The training objective is a generative masked language modeling (MLM) task, yet it innovates by incorporating a dynamic masking strategy. Instead of a static mask rate (e.g., masking 15% of tokens as in BERT), ESM3 employs a varied masking approach where the mask ratio changes, allowing the model to be challenged with a wider range of prediction tasks during training. This dynamic masking encourages a more robust internal representation of protein-related data, enhancing the model's ability to interpolate or extrapolate from given sequences, structural data, or functional annotations.

Tokenization of Structure and Function

A unique aspect of ESM3 is how it deals with protein structure. Utilizing a discrete autoencoder, the model compresses 3D structural information into discrete tokens. This process necessitates an efficient method to capture the complexity of protein structures in a format that's amenable to transformer-based models. The introduction of an invariant geometric attention mechanism, which enables the model to interpret 3D structures in their local spatial contexts and integrate these insights globally, is particularly innovative. This mechanism mirrors attention mechanisms in LLMs but adapts them to handle the inherently geometric nature of protein data.

For functional data, ESM3 incorporates tokenized keyword sets, allowing the model to understand and predict the functional aspects of proteins based on amino acid sequences and structural configurations. This multimodal tokenization strategy facilitates a comprehensive understanding across sequence, structure, and function.

Generative Capabilities and Representation Learning

ESM3's generative capabilities are highlighted by its ability to predict missing information across any of the input modalities, whether starting from scratch or from partial inputs. This aspect is particularly important for tasks such as de novo protein design or function prediction, where incomplete information must often be reasoned into coherent wholes.

The balance it strikes between generative capabilities and representation learning is notable. By selecting an appropriate noise schedule, ESM3 improves both its predictive power and the quality of the latent representations it learns. This dual capacity makes it not just a tool for generating predictions but also a powerful method for exploratory data analysis and hypothesis generation in the protein space.

Conclusion

For LLM experts, ESM3 represents an exciting exploration into how the principles underlying successful language models can be extended beyond text to tackle problems in biological sequence, structure, and function prediction. Its training methodology, attention to the uniqueness of protein structures, and innovative use of tokenization position ESM3 as a state-of-the-art model that pushes the boundaries of what's possible with LLM technologies in the life sciences.

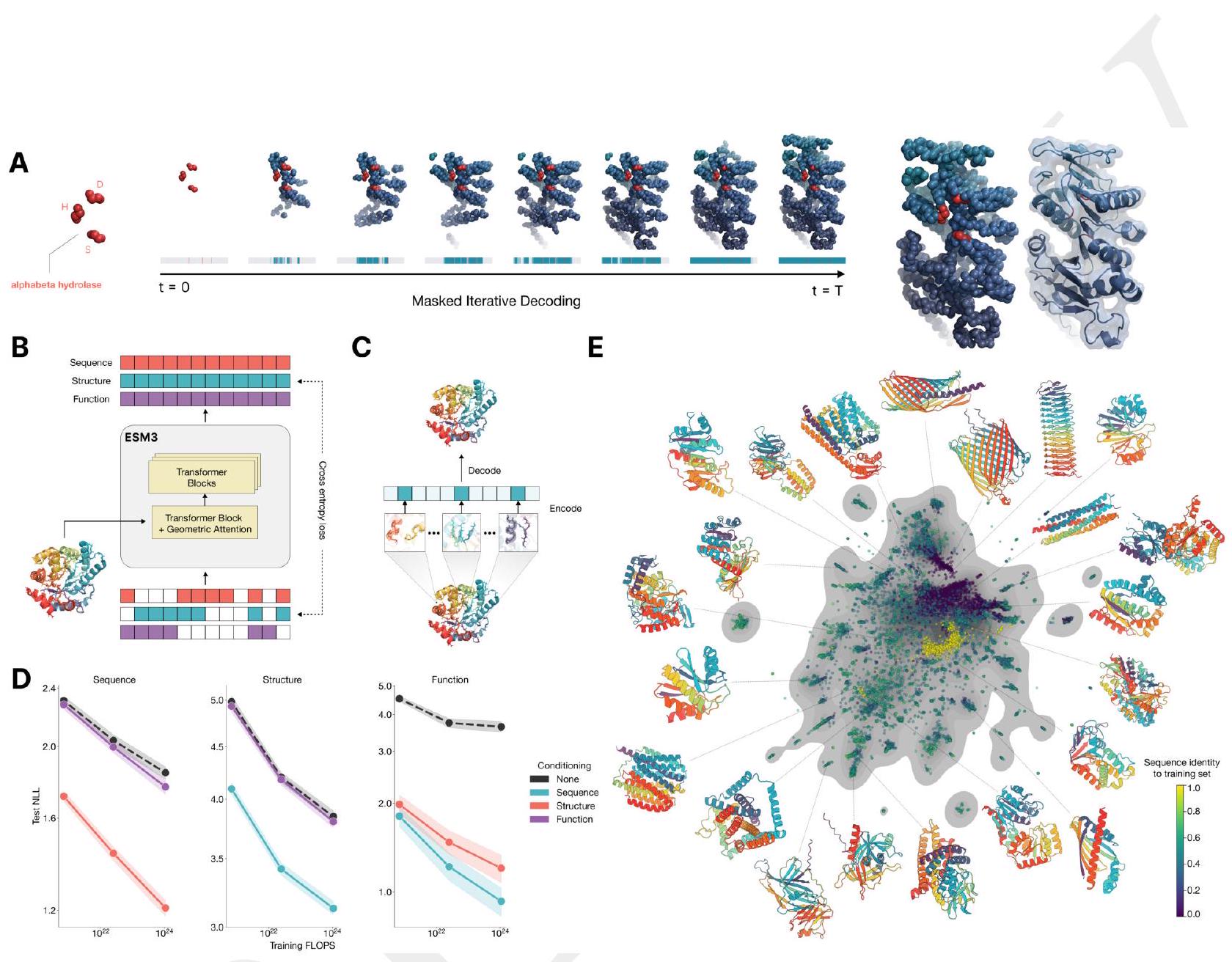

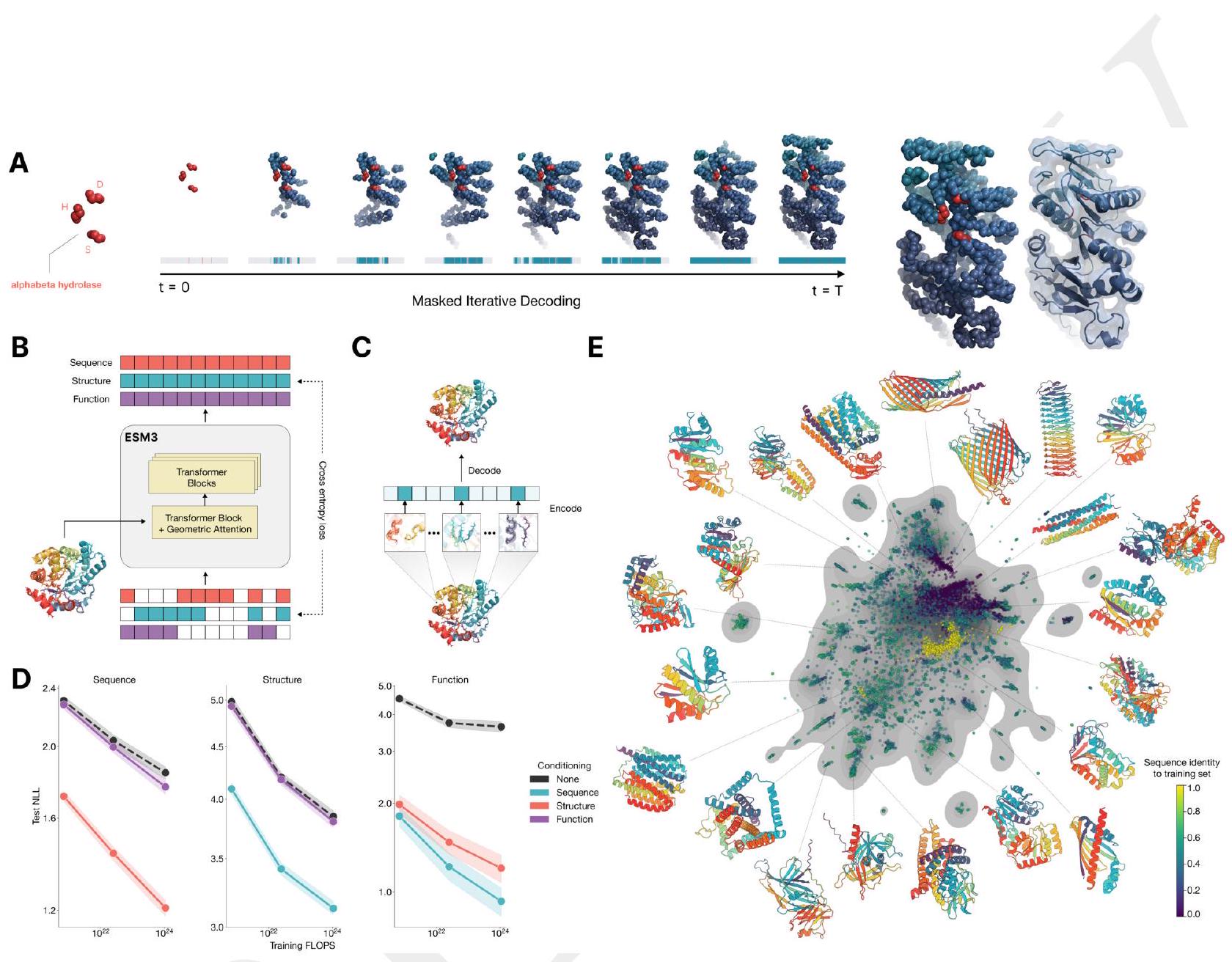

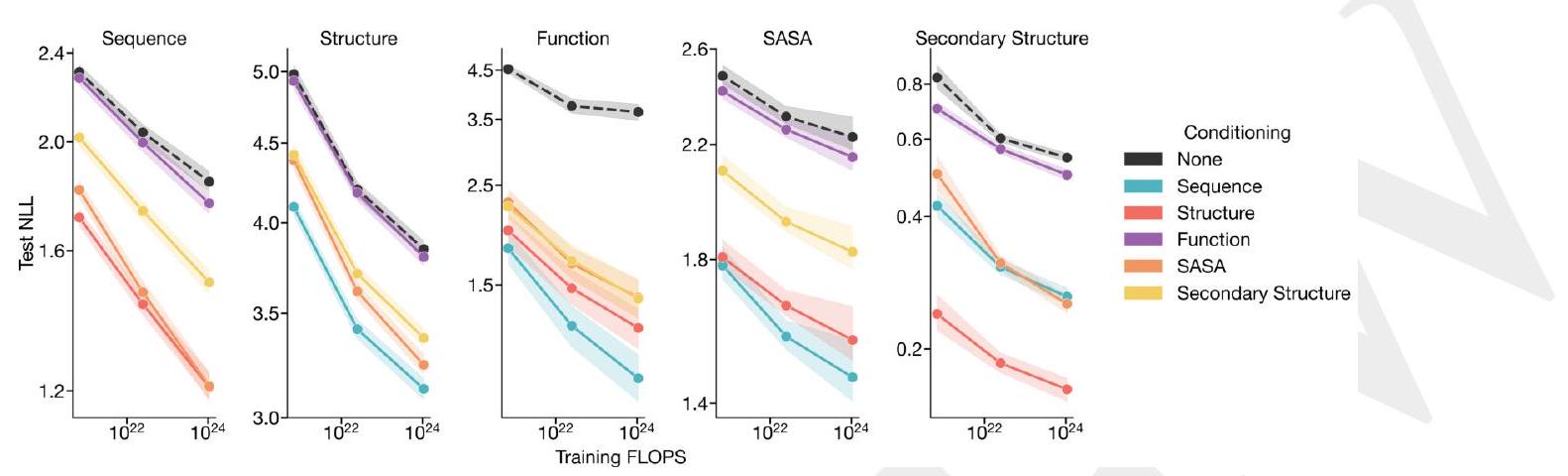

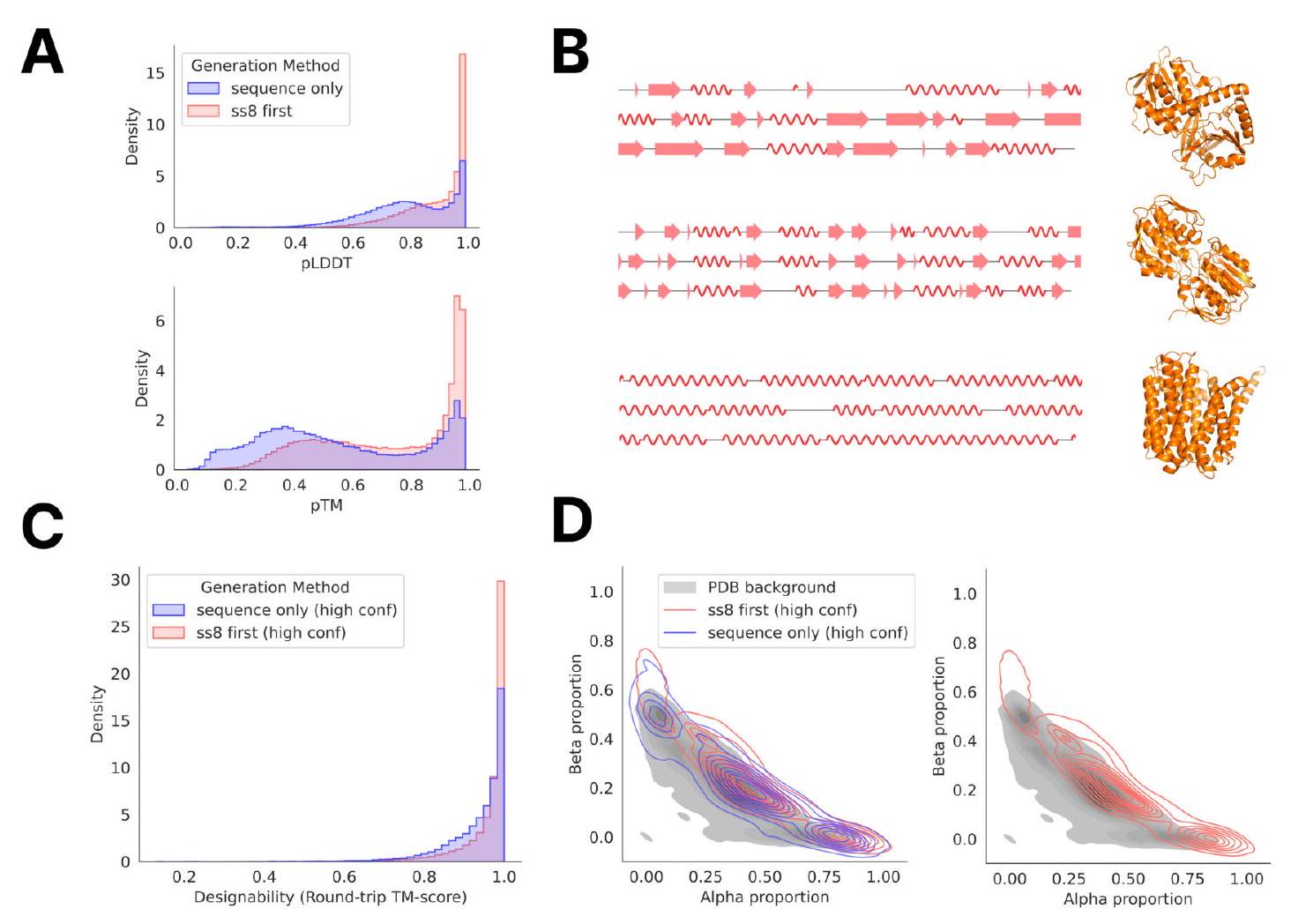

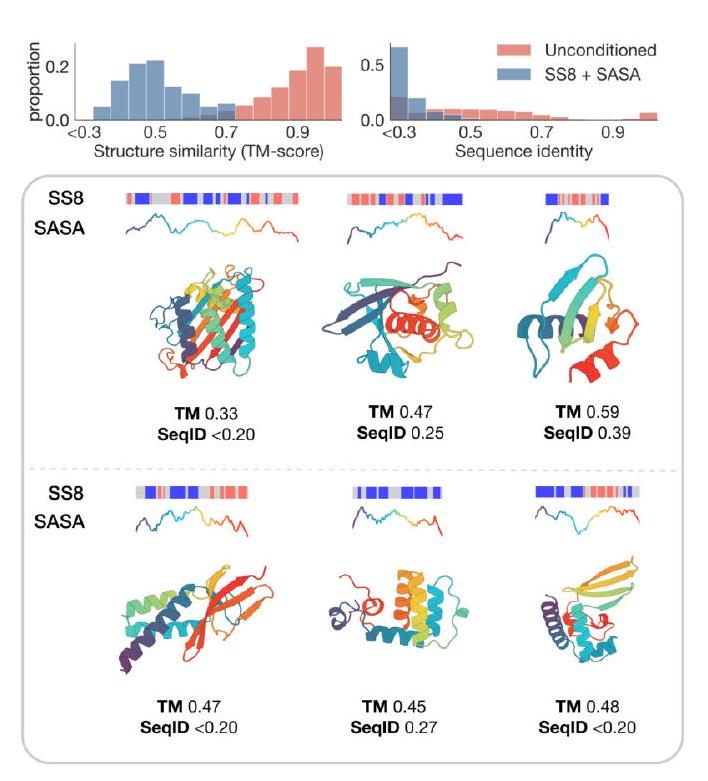

Figure 1. ESM3 is a generative language model that reasons over the sequence, structure, and function of proteins. (A) Iterative sampling with ESM3. Sequence, structure, and function can all be used to prompt the model. At each timestep $t$, a fraction of the masked positions are sampled until all positions are unmasked. (B) ESM3 architecture. Sequence, structure, and function are represented as tracks of discrete tokens at the input and output. The model is a series of transformer blocks, where all tracks are fused within a single latent space; geometric attention in the first block allows conditioning on atomic coordinates. ESM3 is supervised to predict masked tokens. (C) Structure tokenization. Local atomic structure around each amino acid is encoded into tokens. (D) Models are trained at three scales: 1.4B, 7B, and 98B parameters. Negative log likelihood on test set as a function of training FLOPs shows response to conditioning on each of the input tracks, improving with increasing FLOPs. (E) Unconditional generations from ESM3 98B (colored by sequence identity to the nearest sequence in the training set), embedded by ESM3, and projected by UMAP alongside randomly sampled sequences from UniProt (in gray). Generations are diverse, high quality, and cover the distribution of natural sequences.

stack of transformer blocks. The first transformer block also includes a geometric attention layer for atomic structure coordinate conditioning. At the output of the model, shallow MLP heads project the final layer representation into token probabilities for each of the tracks.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 is a generative language model designed for predicting protein sequences, structures, and functions.

The largest ESM3 model is trained on 2.78 billion natural proteins derived from sequence and structure databases (2, 34-37). As a small fraction of structures have been experimentally determined relative to sequences, we leverage predicted structures (4, 5). We also generate synthetic sequences with an inverse folding model (described in Appendix A.2.1.3) for all structures, including predicted ones. Function keywords are derived by predicting functional annotations from sequence using a library of hidden Markov models (38). Overall this increased training data to 3.15 billion protein sequences, 236 million protein structures, and 539 million proteins with function annotations, totaling 771 billion unique tokens. Full details of the training dataset are described in Appendix A.2.1.8.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - The largest ESM3 model is trained on 2.78 billion natural proteins.

ME: Please analyze this paragraph from the point of view of an expert scientist and be critical of any omissions or possible sources of problems you see.

AI: Analyzing this paragraph from the perspective of an expert scientist specializing in computational biology and protein modeling reveals several points of interest and potential areas for critique:

The text notes that the model leverages predicted structures due to the relatively small number of experimentally determined structures. While advances in protein structure prediction, notably those achieved by tools like AlphaFold, have significantly reduced the gap between predicted and experimental structures, there are inherent inaccuracies and biases in prediction models that can propagate through the ESM3 model. This reliance on predicted structures could introduce systemic errors or biases, particularly for proteins with novel folds or those from less-studied organisms.

The generation of synthetic sequences through an inverse folding model is an innovative way to enhance the training data. However, the quality and diversity of these synthetic sequences are crucial. There is a risk that the synthetic sequences may not accurately reflect natural protein diversity, especially for proteins from extremophiles or those undergoing rapid evolution. The method's robustness in generating realistic and biologically plausible sequences should be scrutinized.

Protein function prediction using a library of hidden Markov models is a well-established method. However, functional annotations in databases often suffer from incompleteness, errors, or biases toward well-studied proteins. The approach to predicting function keywords from sequences may also inherit these biases, affecting the model's ability to accurately predict functions for less-characterized or novel proteins.

While the large scale of the dataset (3.15 billion protein sequences, 236 million structures, and 539 million function annotations) is impressive, the quality of this data is as critical as its quantity. The paragraph does not detail the steps taken to ensure data quality, such as filtering out redundant or low-quality sequences and structures, which could significantly impact the model's performance.

The diversity of the training dataset directly influences the model's generalizability. The paragraph does not mention the taxonomic or environmental diversity of the source data. Models trained predominantly on data from model organisms may not perform as well on sequences from less-studied species.

The paragraph focuses on the dataset's preparation and lacks discussion on how the model's performance was evaluated, particularly concerning the novel synthetic sequences and predicted functions. Insights into the validation methods, such as cross-validation with experimentally determined structures and functions, would be vital to assess the reliability of the model's predictions.

Given the model's extensive training data, there is a potential risk of overfitting, where the model may excel at predicting features of the training data but perform poorly on unseen data. Strategies to mitigate overfitting, such as regularization techniques or training with a diverse set of sequences, should be examined.

Finally, while not a technical critique, it's important to consider the ethical implications of creating and using powerful predictive models like ESM3. Issues around data privacy, consent (particularly for human-derived proteins), and potential misuse for creating harmful biological agents should be addressed.

In summary, while the development of the ESM3 model represents a significant advance in protein structure and function prediction, the methodologies employed warrant close scrutiny to ensure the reliability, accuracy, and ethical use of the generated predictions and synthetic sequences.

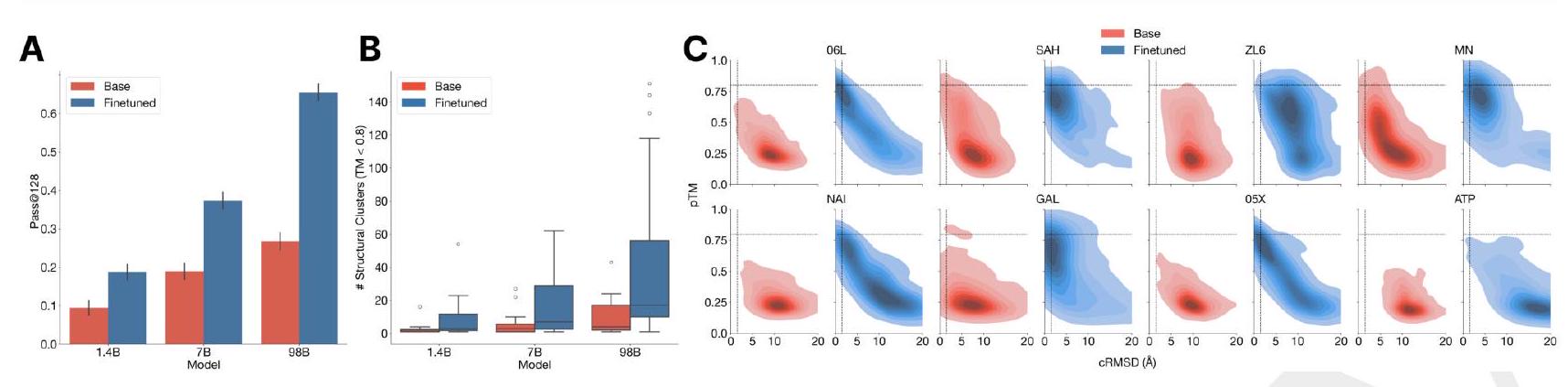

We train ESM3 models at three scales: 1.4 billion, 7 billion, and 98 billion parameters. In an initial series of experiments to evaluate representation learning performance in response to architecture hyperparameters, we find a greater response to increasing depth than to width. This informed the choice of relatively deep networks for the final architectures, with the 98 billion parameter model incorporating 216 Transformer blocks (Appendix A.1.5).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - The ESM3 models were trained at three different scales: 1.4 billion, 7 billion, and 98 billion parameters.

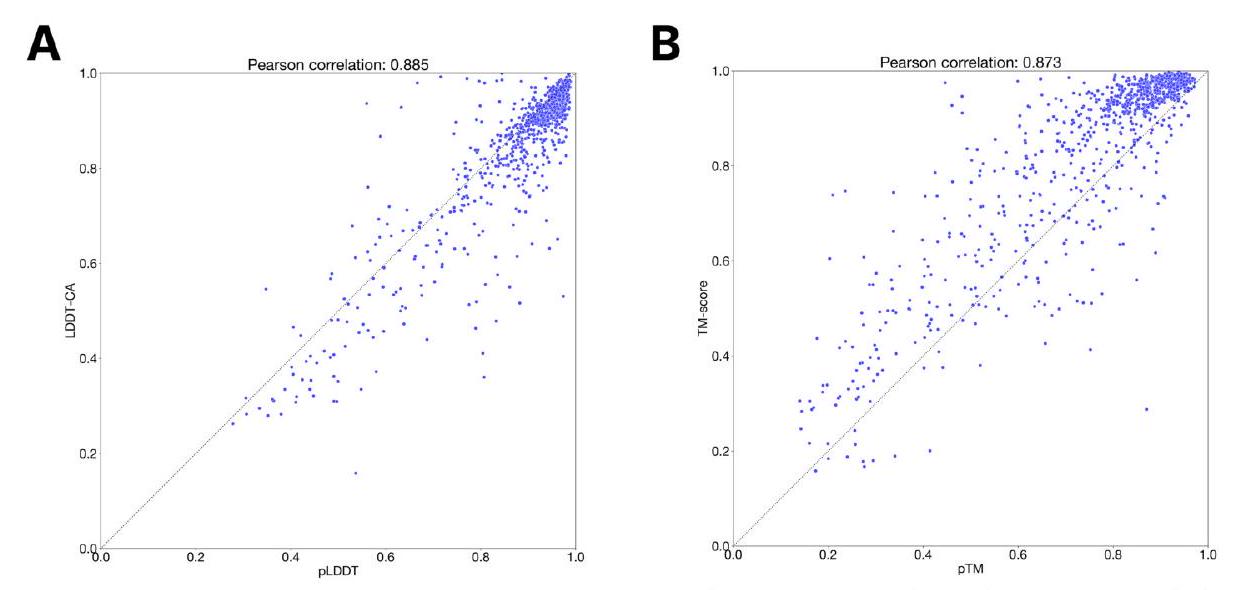

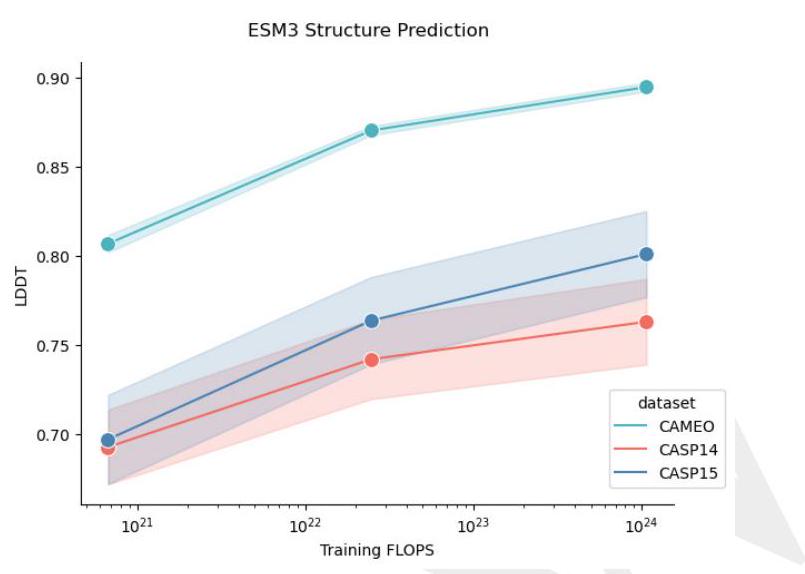

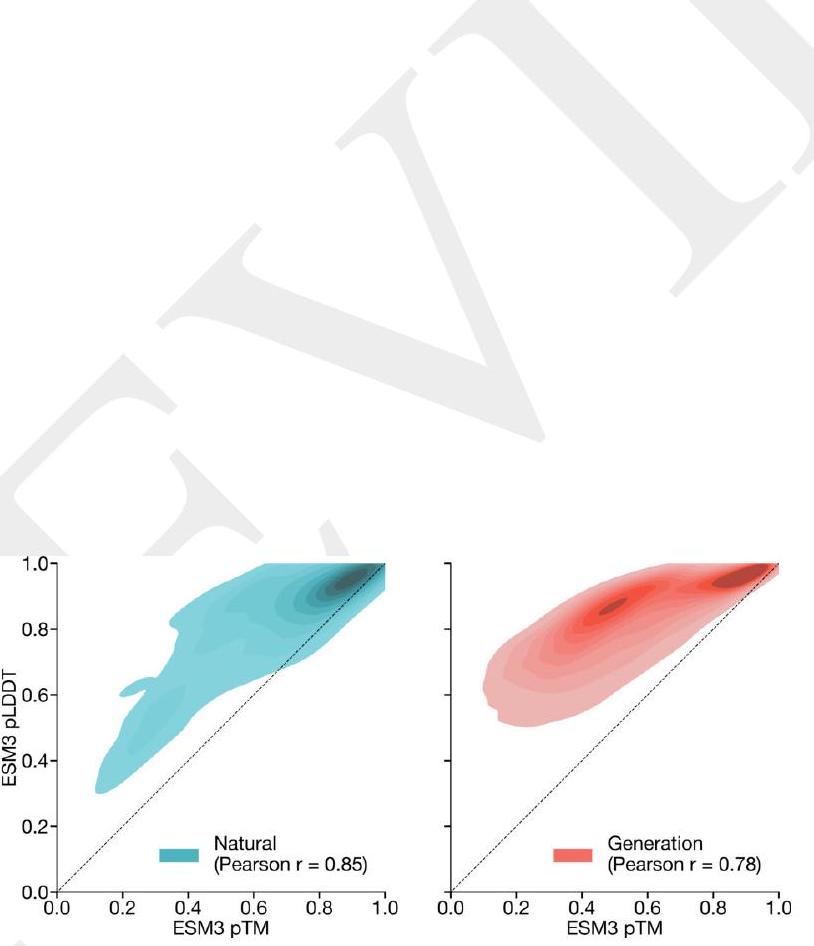

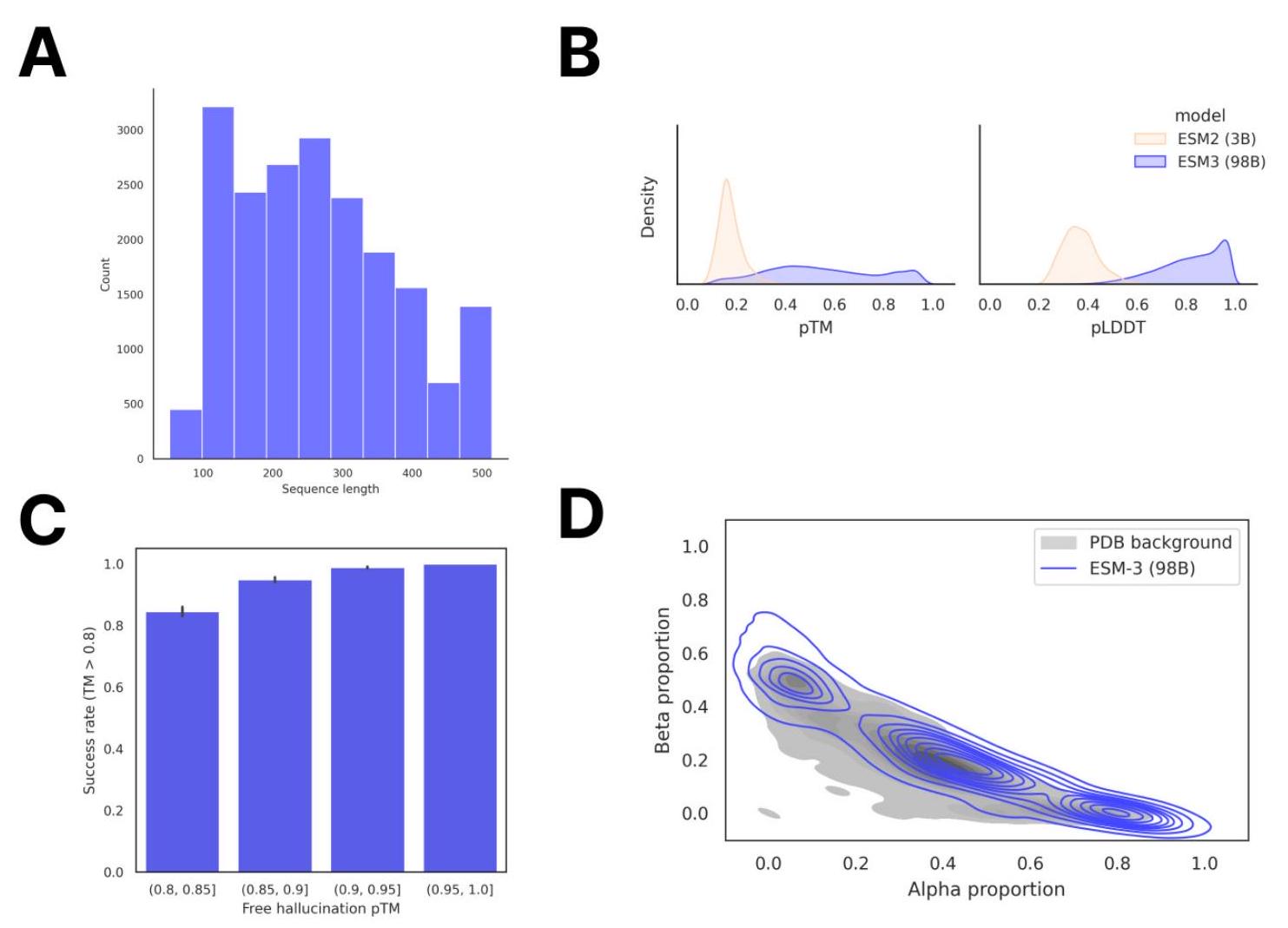

Scaling ESM3 from 1.4 billion to 98 billion parameters results in substantial improvements in the validation loss for all tracks, with the greatest improvements observed in sequence loss (Fig. 1D, Fig. S11). These gains in validation loss lead to better representation learning (Table S7 and Fig. S8). In single sequence structure prediction (Table S8) on CAMEO, ESM3 98B obtains 0.895 mean local distance difference test (LDDT) and surpasses ESMFold (0.865 LDDT). Unconditional generation produces high-quality proteins (mean predicted LDDT (pLDDT) 0.84 and predicted template modeling score (pTM) 0.52) that are diverse in both sequence (mean pairwise sequence identity 0.155) and structure (mean pairwise TM score 0.48), spanning the distribution of known proteins (Fig. 1E, Fig. S13).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - Scaling ESM3 from 1.4 billion to 98 billion parameters results in substantial improvements in the validation loss for all tracks.
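Since LDDT is the headline structure metric here (0.895 vs 0.865), a simplified sketch of how such a score is computed may be useful. This version uses one point per residue (for example Cα atoms) and a single global average; the conventional 15 Å inclusion radius and 0.5/1/2/4 Å thresholds are used, but details such as all-atom scoring and per-residue averaging are omitted, so it will not reproduce the paper's numbers. The coordinates are invented toy values.

```python
import math


def lddt_ca(pred, ref, cutoff=15.0, thresholds=(0.5, 1.0, 2.0, 4.0)):
    """Simplified global lDDT over one point per residue.

    For every residue pair closer than `cutoff` in the reference, check
    whether the predicted distance matches within each threshold, and
    return the fraction of checks that pass. No superposition is needed
    because only internal distances are compared.
    """
    preserved = 0
    total = 0
    n = len(ref)
    for i in range(n):
        for j in range(i + 1, n):
            d_ref = math.dist(ref[i], ref[j])
            if d_ref >= cutoff:
                continue
            diff = abs(d_ref - math.dist(pred[i], pred[j]))
            preserved += sum(diff < t for t in thresholds)
            total += len(thresholds)
    return preserved / total if total else 0.0


ref = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
shifted = [(x + 10.0, y - 5.0, z + 2.0) for x, y, z in ref]  # rigid shift
distorted = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (9.1, 0.0, 0.0)]

print(lddt_ca(ref, ref), lddt_ca(shifted, ref), lddt_ca(distorted, ref))
```

A rigid shift of the whole prediction leaves the score unchanged, while displacing a single residue by 1.5 Å lowers it, which is the behavior that makes the metric local and alignment-free.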

ME: Please analyze this paragraph from the point of view of an expert scientist and be critical of any omissions or possible sources of problems you see.

AI: Analyzing the paragraph provided from the viewpoint of an expert scientist, a number of critical reflections and potential issues can be brought up regarding omissions and sources of problems related to the dramatic scaling of parameters in the ESM3 model from 1.4 billion to 98 billion, as well as the results discussed therein.

Computational Resources and Accessibility: The increase in parameters from 1.4 billion to 98 billion undoubtedly requires significant computational resources. This might limit the accessibility of such a model to a few well-funded laboratories or institutions, curbing the democratization of advanced computational tools in the scientific community. There's an omission of any discussion on the computational costs and energy consumption, which are essential factors to consider given the increasing awareness of the environmental impact of large-scale computations.

Overfitting Risk: While the paragraph notes substantial improvements in validation loss, there is no mention of how the model performs on completely unseen data, which is a standard measure to assess if the model generalizes well or if it is overfitting. The mention of "validation loss" improvements does not necessarily imply that the model will perform equally well in real-world applications or on datasets that were not part of the training/validation sets.

Underrepresented Data: The scaling of parameters typically enables a model to learn finer details and nuances in the data. However, it can also exacerbate biases towards overrepresented features in the training set. The paragraph omits any discussion on the diversity and representativeness of the training dataset, which are crucial for assessing the reliability and applicability of the model's predictions across diverse biological entities.

Statistical Significance and Error Margins: The increases in performance metrics such as LDDT and TM scores are mentioned without any accompanying statistical analysis data. For a scientifically rigorous evaluation, it would be essential to know the confidence intervals or p-values associated with these improvements to understand if the differences are statistically significant and not due to chance variations in the dataset.

Comparison to Baselines and State of the Art: While the paragraph mentions that ESM3 98B surpasses ESMFold in single sequence structure prediction, it lacks a broader comparison to other state-of-the-art models in the field. A critical understanding of how ESM3 98B positions itself within the landscape of current bioinformatic tools and whether it addresses limitations of existing models is missing.

Diversity and Quality of Generated Proteins: The paragraph asserts that the model produces high-quality, diverse proteins but does not specify how the diversity and quality were assessed or benchmarked. The mean pairwise sequence identity and TM score are given, yet there is no contextualization of these figures within existing literature or experimental validation of the produced structures.

The paragraph omits any discussion on the ethical and practical implications of deploying a substantially more powerful model like ESM3 98B. For instance, issues related to biosecurity, the potential creation of harmful proteins, or the monopolization of cutting-edge tools by a few entities are not addressed.

In summary, while the scaling of the ESM3 model to 98 billion parameters appears to offer significant improvements in certain metrics, a critical analysis suggests several areas where additional information, broader context, and a more rigorous scrutiny of the results and their implications would be necessary to fully evaluate the advancements and their potential impact on the field of bioinformatics and beyond.

We explore the ability of ESM3 to follow complex prompts with different compositions. ESM3 can be prompted with instructions from each of its input tracks: sequence, structure coordinates, secondary structure (SS8), solvent-accessible surface area (SASA), and function keywords. This allows prompts to be specified at multiple levels of abstraction, from atomic level structure to high level keywords describing the function and fold topology, using the learned generative model to find a coherent solution that respects the prompt.

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 can be prompted with instructions from various input tracks.

ME: Please analyze this paragraph from the point of view of an expert scientist and be critical of any omissions or possible sources of problems you see.

AI: The paragraph provides an overview of the capabilities of ESM3, ostensibly a computational model designed for protein prediction tasks, leveraging multi-input tracks such as sequence, structure coordinates, secondary structure (SS8), solvent-accessible surface area (SASA), and function keywords. The claim is that ESM3 can follow complex prompts across these various dimensions to generate coherent predictions. While promising, there are several critical aspects and potential problems that need to be highlighted from an expert standpoint:

Model Generalizability: One of the core challenges in protein prediction models involves their generalizability to unseen or less common protein structures. The paragraph does not address how ESM3 handles this issue, particularly regarding rare folds or proteins from less-studied organisms. The ability of a model to generate coherent solutions across a diverse set of protein structures is vital for its application in real-world scenarios.

Data Dependency: The performance of machine learning models, such as ESM3, is highly dependent on the quality and diversity of the training data. There's no mention of the data used to train ESM3 or how issues of bias or incomplete datasets were addressed. Incomplete or biased datasets could lead to incorrect predictions, particularly for proteins that do not fall within the "typical" range of the training data.

Interpretability and Validation: While the paragraph suggests that ESM3 can integrate prompts across various levels of abstraction, it does not mention how the model's predictions can be interpreted or validated. For example, how does one assess the accuracy of the generated structure or function predictions? The ability to critically evaluate and trust the model's output is crucial for scientific and practical applications.

Computational Requirements: Models that integrate multiple input tracks and produce complex outputs tend to be computationally intensive. The paragraph does not address the computational resources required for running ESM3, which is a critical factor for its accessibility and use in different environments, ranging from academic labs to industry settings.

Handling of Dynamic and Flexible Regions: Proteins are dynamic entities, and their function often depends on conformational flexibility. The text does not discuss how ESM3 deals with intrinsically disordered regions or proteins that adopt multiple stable conformations, which are challenging for many prediction models.

Integration with Experimental Data: The ultimate test of any protein prediction model is its ability to generate hypotheses or predictions that can be validated experimentally. The paragraph does not mention if or how ESM3's predictions can be integrated with experimental workflows, a critical aspect for its adoption in drug discovery, functional genomics, and other areas.

Ethical Considerations and Misuse: Finally, while not directly related to the scientific capabilities of ESM3, any powerful predictive tool raises questions regarding ethical use, potential misuse, and the consequences of incorrect predictions. These aspects are increasingly important to address in the development and deployment phases of computational tools.

In summary, while ESM3 appears to offer a versatile and potentially powerful tool for protein prediction, several areas require clarification and further discussion, including its generalizability, data requirements, interpretability, computational demands, handling of protein dynamics, integration with experimental validation, and broader ethical considerations.

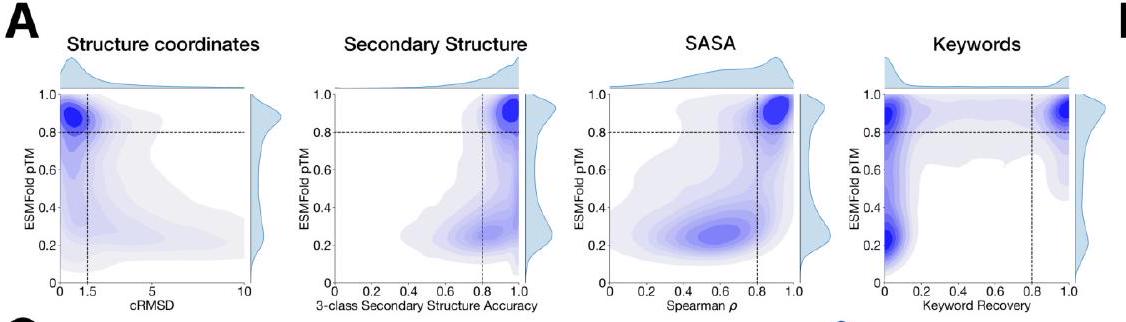

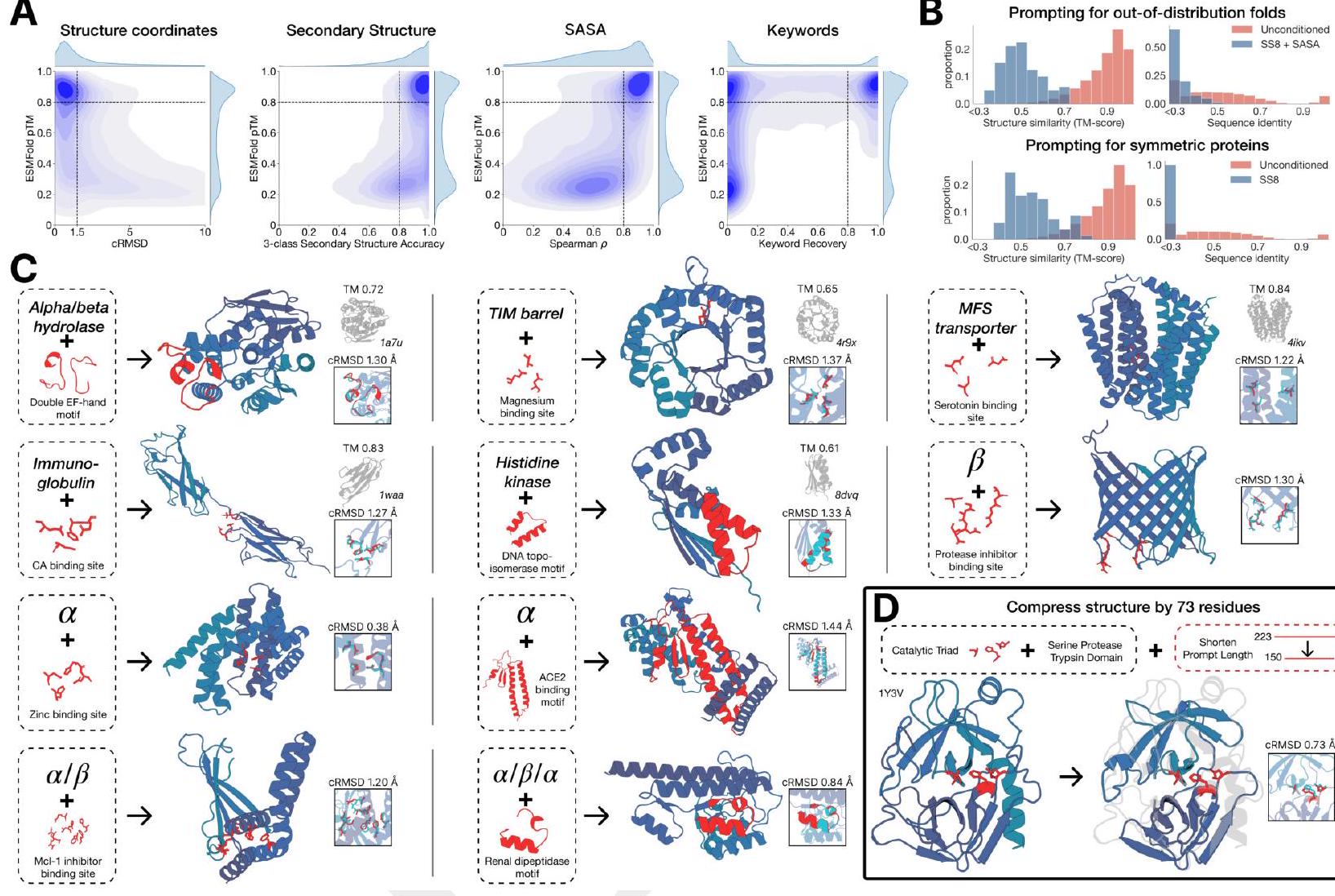

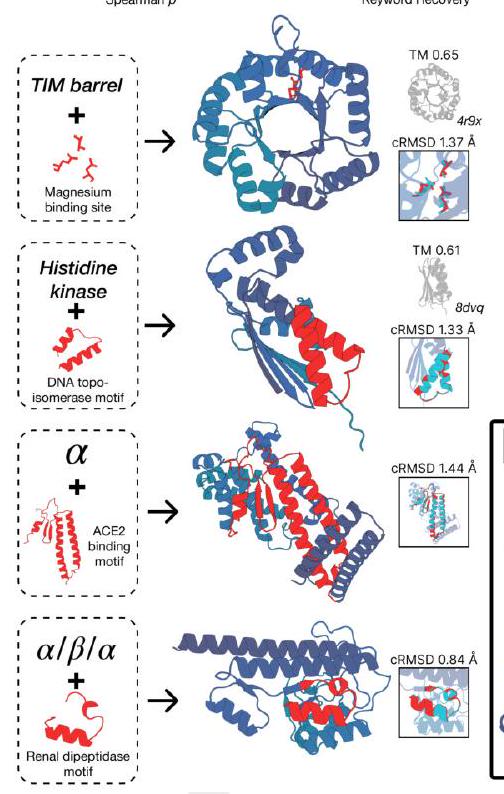

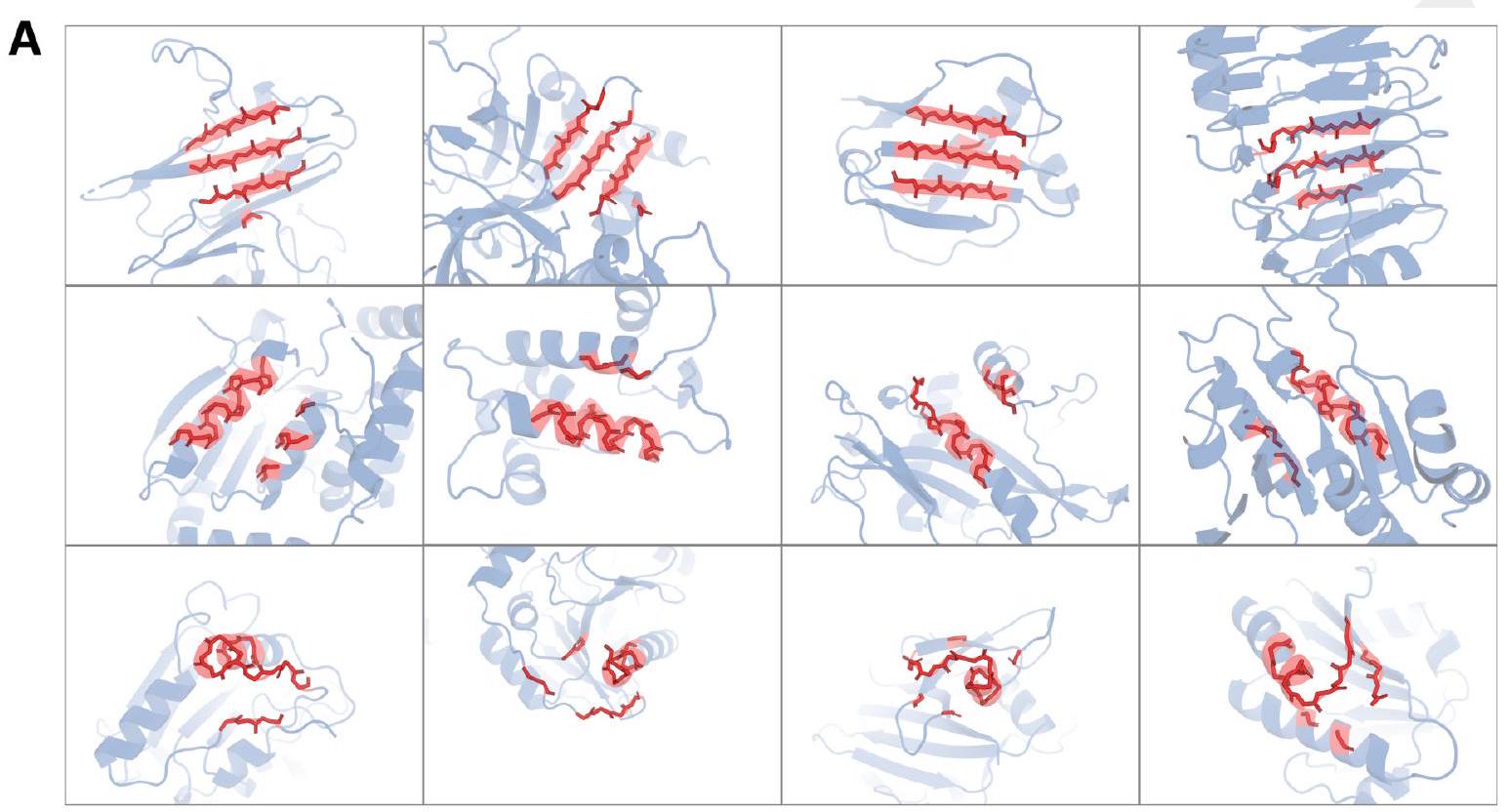

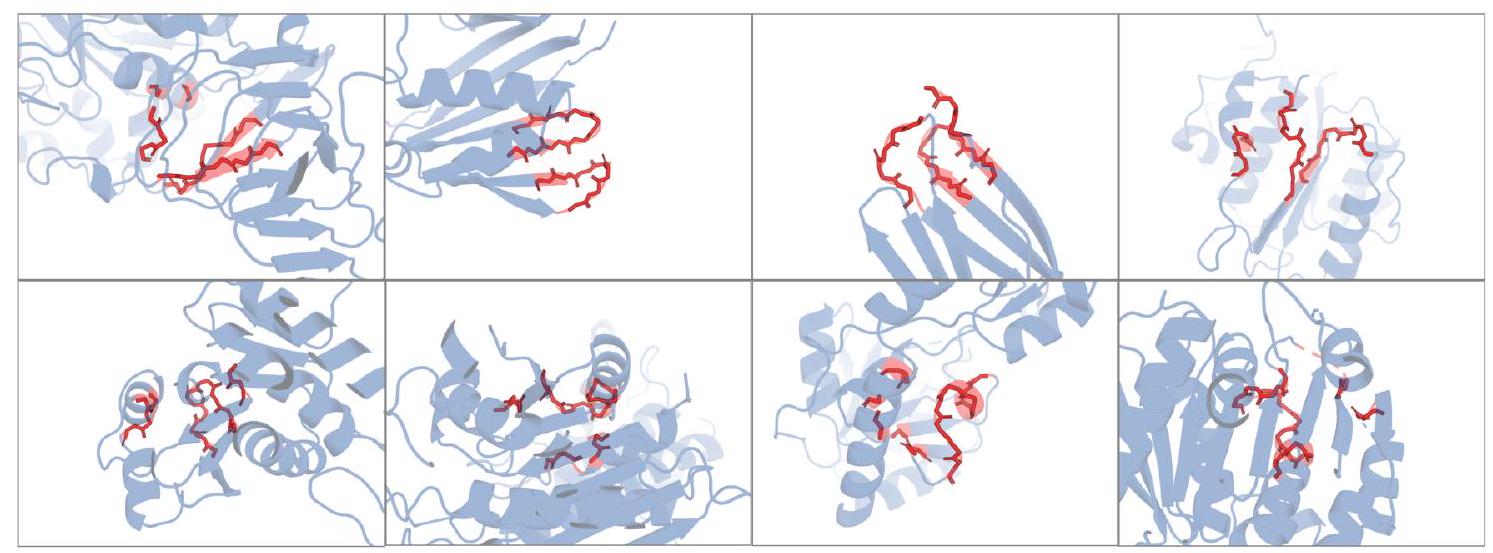

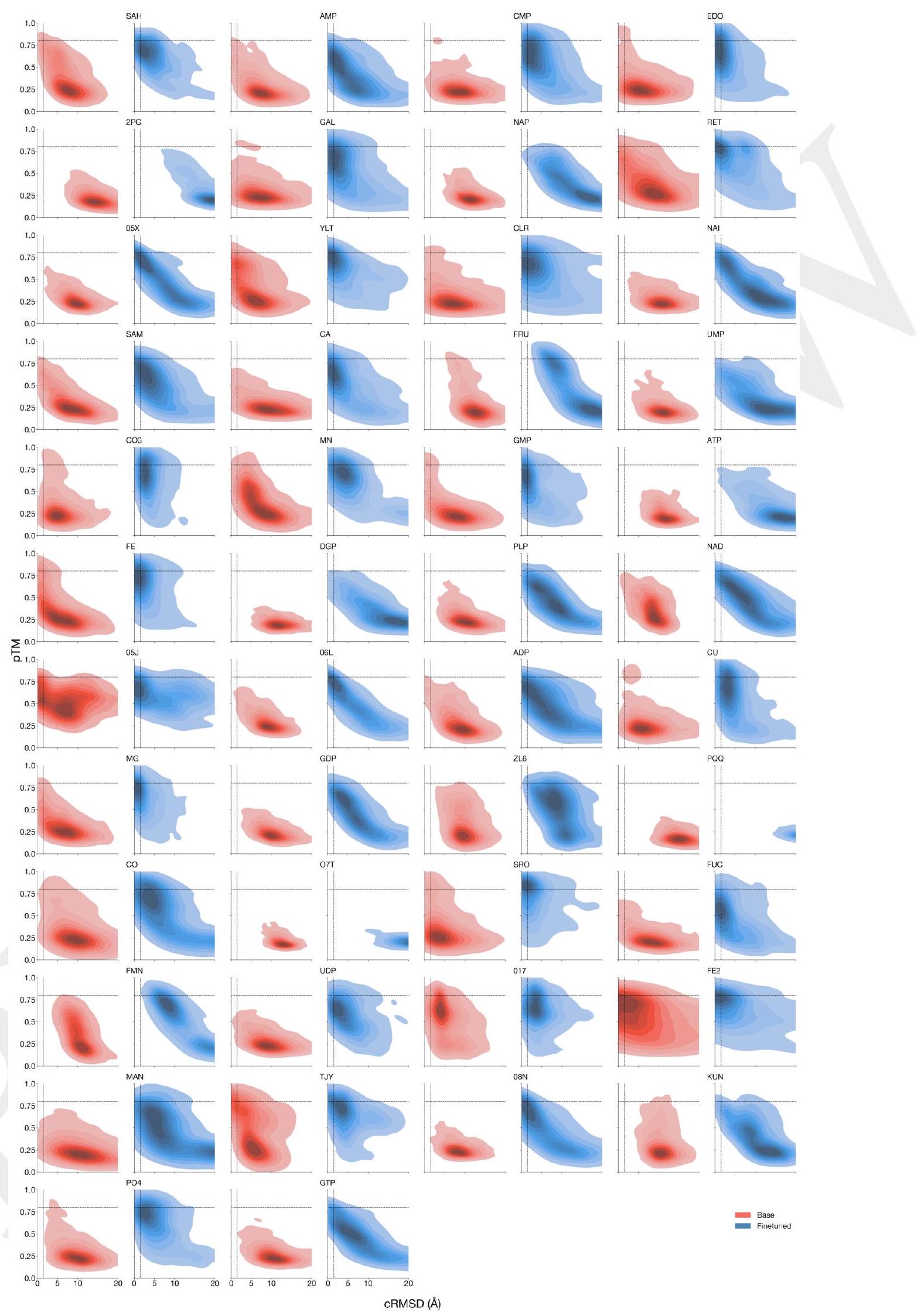

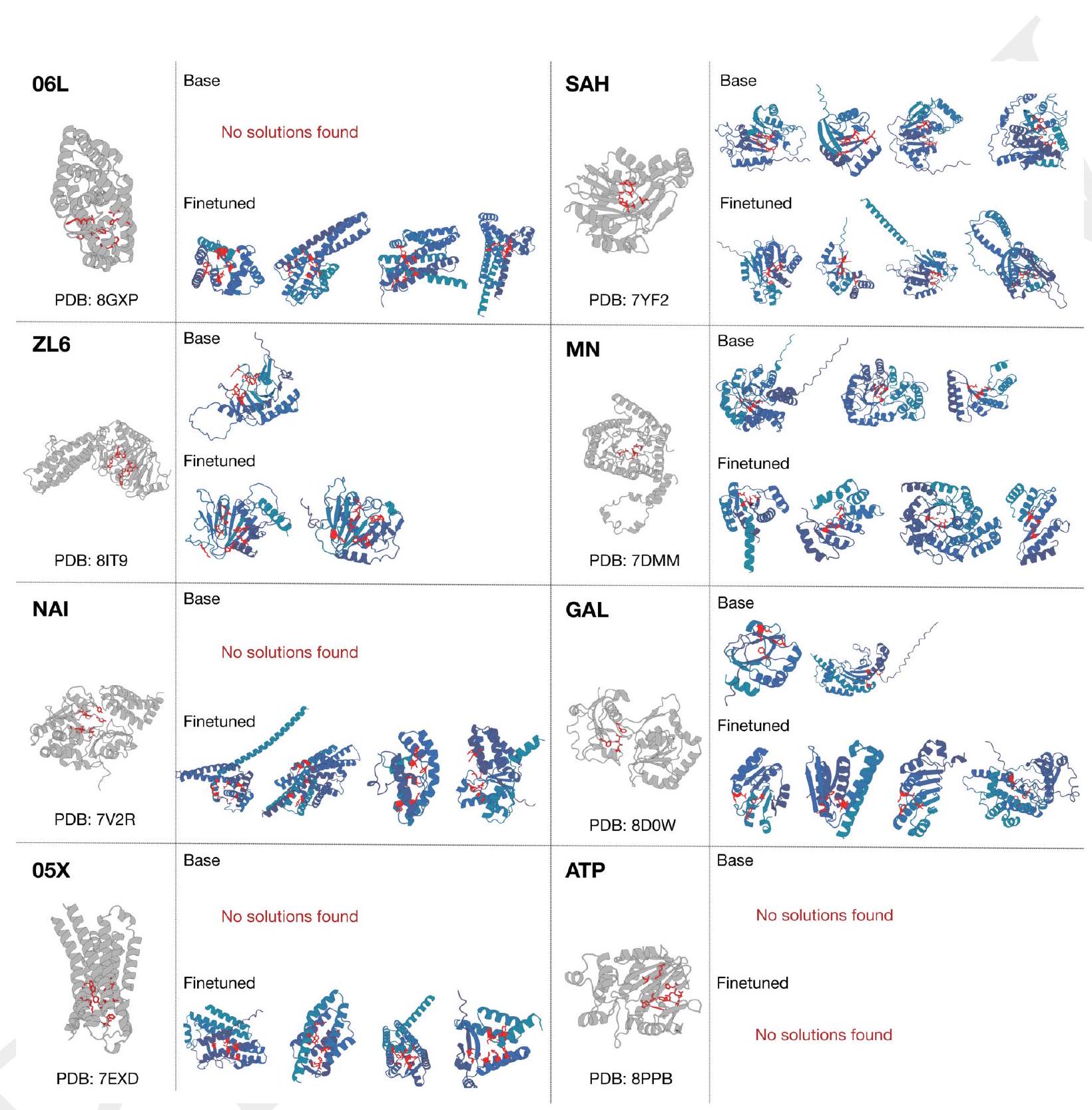

We evaluate ESM3's ability to follow prompts in each of the tracks independently. A set of prompts is constructed for each of the tracks using a temporally held out test set of natural proteins (Appendix A.3.7). We evaluated the resulting generations for consistency with the prompt and foldability, the confidence of the structure prediction TM-score (pTM) under ESMFold. We define consistency metrics for each track: constrained site RMSD (cRMSD) is the RMSD between the prompt coordinates and the corresponding coordinates in the generation; SS3 accuracy is the fraction of residues where three-class secondary structure between the prompt and generations match; SASA Spearman $\rho$ is the correlation between the SASA prompt and the corresponding region of the generation; keyword recovery is the fraction of prompt keywords recovered by InterProScan (38). Across all tracks, ESM3 finds solutions that follow the prompt, and have confidently predicted structures by ESMFold (pTM $>0.8$) (Fig. 2A).

ME: Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - Evaluation of ESM3's ability to follow prompts is conducted for multiple tracks independently.

AI: This summary describes a systematic evaluation of ESM3, a presumably advanced model trained to generate protein structures that are consistent with specific constraints or prompts given to it. The evaluation process aims to assess how well ESM3 can generate protein structures that not only follow the instructions provided in various prompts but also result in viable, foldable proteins as predicted by ESMFold, a tool for predicting the confidence of protein structure predictions.

The methodology for this evaluation involved crafting a series of prompts divided into different tracks, each focusing on distinct aspects or requirements of protein structure modeling. These prompts were based on a dataset of natural proteins, specifically chosen from data that was not used during the model's training phase to ensure an unbiased assessment of its generative abilities.

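Key metrics were defined to quantitatively measure the model's performance in adhering to the prompts and generating structurally viable proteins, namely constrained site RMSD (cRMSD), SS3 accuracy, SASA Spearman ρ, and keyword recovery, with pTM under ESMFold as the measure of foldability.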

The findings indicate that across all tracks, ESM3 was able to generate protein structures that not only adhered to the detailed instructions provided in the prompts but also were deemed foldable with high confidence (pTM score greater than 0.8) by ESMFold. Figure 2A in the context presumably illustrates these results, offering visual or quantitative evidence of ESM3's proficiency in creating accurate and reliable protein structures based on specified criteria. This highlights ESM3's potential as a powerful tool in protein design and modeling, allowing for the custom generation of proteins with desired structures and functions.

ME: please explain it for an expert in LLMs and AI

AI: The evaluation of ESM3's capabilities in generating natural protein structures from specified prompts showcases a significant advancement in the field of artificial intelligence (AI), specifically within the domain of language models and their application in structural biology. ESM3 appears to be a state-of-the-art iteration of generative AI models, tailored for the complex task of protein generation, exhibiting an interdisciplinary leap where natural language processing methodologies are adapted to understand and predict the folding and structural intricacies of proteins.

The evaluation methodology detailed leverages a diverse set of metrics, each meticulously designed to assess the fidelity of ESM3's generated outputs against a series of highly detailed and nuanced prompts. These metrics are:

Constrained Site RMSD (cRMSD): Serving as a precise spatial accuracy metric, cRMSD evaluates how closely the model-generated structures match the specified atomic coordinates in the prompts. This is crucial in applications where specific atomic arrangements are essential for the biological function or stability of the protein.

SS3 Accuracy: This metric aligns with traditional classification tasks in AI but is applied here to the prediction of secondary structures (helices, sheets, coils), assessing the model's ability to understand and recreate the local structural motifs of proteins based on textual or encoded prompts.

SASA Spearman ρ: A rank-correlation measurement, this evaluates the model's proficiency in capturing and replicating the solvent accessibility of amino acids as specified in the prompts. Because Spearman ρ compares rankings rather than raw values, it rewards getting the buried-versus-exposed pattern right even when absolute SASA values differ. Solvent accessibility is a key factor influencing protein stability and interaction capabilities, making this metric indicative of whether the generated protein places the right residues at the surface and in the core.

Keyword Recovery: This metric examines the model's ability to interpret and incorporate functional or structural keywords from the prompts into the generated protein structures. Utilizing InterProScan for validation, this metric bridges the gap between textual description and functional/structural protein annotation, akin to evaluating a language model's understanding of context and its ability to generate relevant content based on that context.

The findings, particularly the achievement of confidently predicted structures (pTM > 0.8), not only underscore ESM3's proficiency in generating biologically plausible protein structures but also highlight the model's understanding of complex structural biology concepts distilled into prompt-based instructions. This implies a significant abstraction capability of the model, where it not only interprets but effectively applies sophisticated biological principles to generate functional protein structures.

Moreover, the successful application of ESM3 in this domain represents a promising amalgamation of AI, specifically LLMs, into computational biology and structural bioinformatics. It reflects the growing trend of utilizing AI's pattern recognition and generative capabilities to tackle challenges in scientific domains that are traditionally considered beyond the scope of existing AI methodologies. This not only expands the utility of LLMs into new areas but also opens avenues for innovative approaches to protein design, with potential implications for drug discovery, enzyme design, and understanding disease mechanisms at the molecular level.

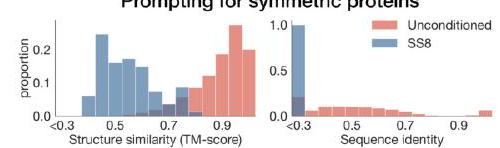

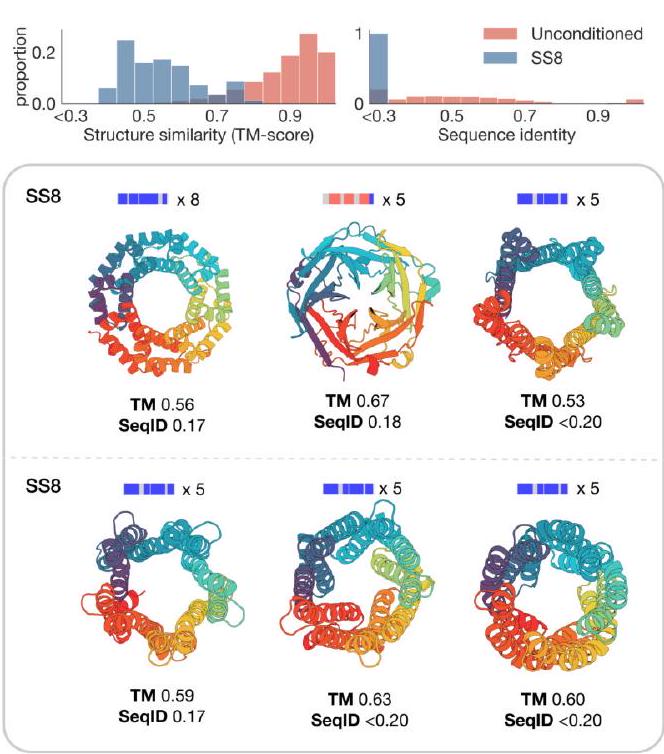

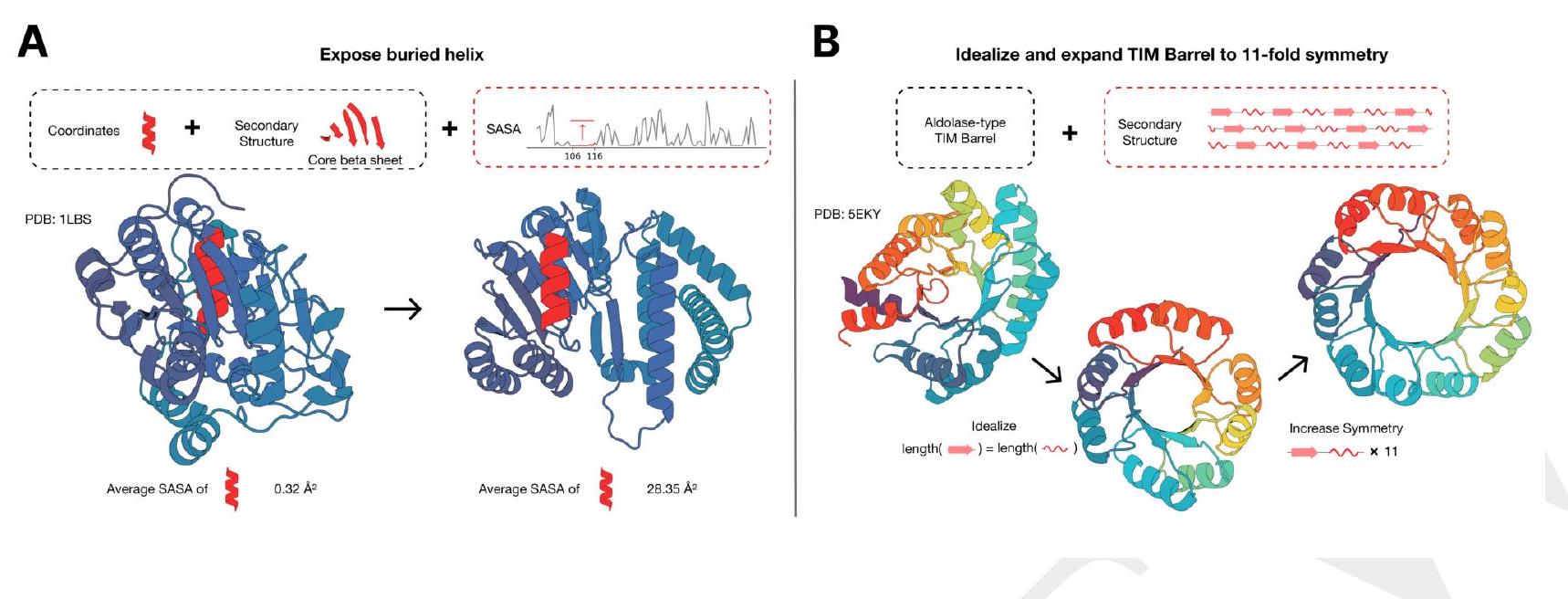

Unconditional generations reflect the distribution of natural proteins. Since we observed ESM3 can faithfully follow prompts, we reasoned that prompting could steer the model to generate proteins that differ from natural proteins. First we test the ability of the model to follow out-of-distribution prompts. We construct a set of prompts combining SS8 and SASA from held out structures (TM $<0.7$ to training set). Under these prompts, while the model continues to generate coherent globular structures (mean pTM $0.85 \pm 0.03$), the distribution of similarities to the training set (as measured by TM-score and sequence identity) shifts to be more novel (average sequence identity to nearest training set protein $<20\%$ and mean TM-score $0.48 \pm 0.09$; Fig. 2B top). To test the ability to generalize to structures beyond the distribution of natural proteins, we use secondary structure prompts derived from a dataset of artificial symmetric protein designs distinct from the natural proteins found in the training dataset (Appendix A.3.8). Similarly, ESM3 produces high confidence generations (pTM $>0.8$, pLDDT $>0.8$) with low sequence and structure similarity to proteins in the training set (sequence identity $<20\%$ and TM-score $0.52 \pm 0.10$; Fig. 2B bottom), indicating that the model can be used to generate protein sequences and structures highly distinct from those that exist in nature.

Please analyze this paragraph from the point of view of an expert scientist and be critical of any omissions or possible sources of problems you see. Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: Analyzing the paragraph from the perspective of an expert scientist, while the paragraph provides valuable insights into the capabilities of the model (ESM3) in generating novel protein structures, there are inherent criticisms and potential problems not addressed. Below, the key facts and ideas are extracted and outlined in markdown list format. Subsequently, I will highlight potential omissions and sources of problems.

Addressing these omissions and potential problem areas would provide a more comprehensive understanding of the model's efficacy, limitations, and potential impact on protein engineering and synthetic biology endeavors.

AI: The paragraph presents intriguing advancements in the ability of a deep learning model, referred to as ESM3, to generate protein structures. The focus here is on ESM3's ability to respond to prompts and generate proteins both within and beyond the distribution of natural proteins. I'll dissect the paragraph from a critical scientific standpoint, underlining significant insights while also highlighting potential areas of concern or omission.

Out-of-Distribution Generalization: The paragraph underscores ESM3's promising performance in generating coherent globular structures from out-of-distribution prompts, which is a significant milestone in protein design. The ability to steer away from the distribution of known proteins and still maintain structural integrity in the generated proteins is notable.

High Confidence in Novel Generations: Both sets of experiments show that ESM3 can create structures with high confidence (high pTM and pLDDT scores), even when these generations have low similarity to known proteins. This is crucial for novel protein design, potentially paving the way for breakthroughs in creating proteins with unprecedented functionalities.

Evaluation of Functional Viability: While the focus on structural metrics like pTM, pLDDT, and sequence identity is important, the paragraph does not address the functional viability or stability of these generated proteins. Structural coherence does not guarantee functional efficacy, and additional analyses (e.g., molecular dynamics simulations, experimental validation) are necessary to ascertain that these novel proteins can be viably expressed and are functionally active.

Generalization beyond Globular Proteins: The reported experiments centered on globular proteins. However, proteins encompass a wider range of structures, including fibrous and membrane proteins. The model's ability to generalize across different types of proteins, which may have distinct folding patterns and functional roles, remains unaddressed.

Prompt Dependency and Limitations: The paragraph illustrates ESM3's ability to follow prompts effectively but does not discuss the limitations of this approach. For instance, the design of prompts requires substantial understanding of protein structures and might be limiting if the prompts do not accurately reflect feasible structural domains or functional sites.

Dataset Bias and Diversity: Artificial symmetric protein designs are distinct from natural protein databases, facilitating novel protein generation. However, the diversity and representativeness of these artificial designs need scrutiny. Biases in the artificial designs or their insufficient diversity could limit the generalization capabilities of ESM3, skewing toward certain structural motifs or topologies.

Scalability and Computational Costs: Implementing deep learning models for protein generation, especially for out-of-distribution proteins, likely incurs high computational costs. The scalability of ESM3's approach to larger datasets or more complex protein designs is not mentioned, which could be a significant constraint in practical applications.

Ethical and Biosafety Considerations: Lastly, generating proteins beyond the natural distribution raises ethical and biosafety concerns that are not addressed. The potential for creating harmful proteins, either unintentionally or through misuse, necessitates a broader discussion on the control measures and ethical guidelines governing the use of such technologies.

The paragraph presents compelling progress in the field of protein design using deep learning, showcasing ESM3's ability to generate novel protein structures effectively. Despite these advances, there remain critical areas for improvement and consideration, spanning functional validation, model generalization capabilities, application range, and ethical implications. Addressing these aspects would be crucial for translating technological advances into safe and practical applications.
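The thresholds quoted in the paper paragraph above (pTM > 0.8, pLDDT > 0.8, sequence identity < 20%) can be applied mechanically to a batch of generations. The sketch below is illustrative only: the `Generation` record, the function name, and the example values are hypothetical, and pLDDT is assumed to be on a 0 to 1 scale as in the quoted text.

```python
from dataclasses import dataclass
from statistics import mean, stdev

@dataclass
class Generation:
    ptm: float           # predicted TM-score (confidence)
    plddt: float         # predicted lDDT, assumed on a 0-1 scale
    seq_identity: float  # identity to nearest training protein, as a fraction
    tm_score: float      # TM-score to nearest training structure

def summarize_novelty(generations, min_confidence=0.8, max_identity=0.20):
    """Count confident and sequence-novel generations and summarize TM-scores."""
    confident = [g for g in generations
                 if g.ptm > min_confidence and g.plddt > min_confidence]
    novel = [g for g in confident if g.seq_identity < max_identity]
    tm_scores = [g.tm_score for g in confident]
    return {
        "n_confident": len(confident),
        "n_novel_sequence": len(novel),
        "tm_mean": mean(tm_scores) if tm_scores else None,
        "tm_std": stdev(tm_scores) if len(tm_scores) > 1 else None,
    }

# Hypothetical batch: two confident generations, one of them sequence-novel.
batch = [
    Generation(ptm=0.85, plddt=0.90, seq_identity=0.15, tm_score=0.4),
    Generation(ptm=0.90, plddt=0.85, seq_identity=0.30, tm_score=0.6),
    Generation(ptm=0.50, plddt=0.90, seq_identity=0.10, tm_score=0.3),
]
summary = summarize_novelty(batch)
```

Note that this only filters on predicted confidence and similarity. It says nothing about whether a generation would express or function, which is the gap the critique above points to.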

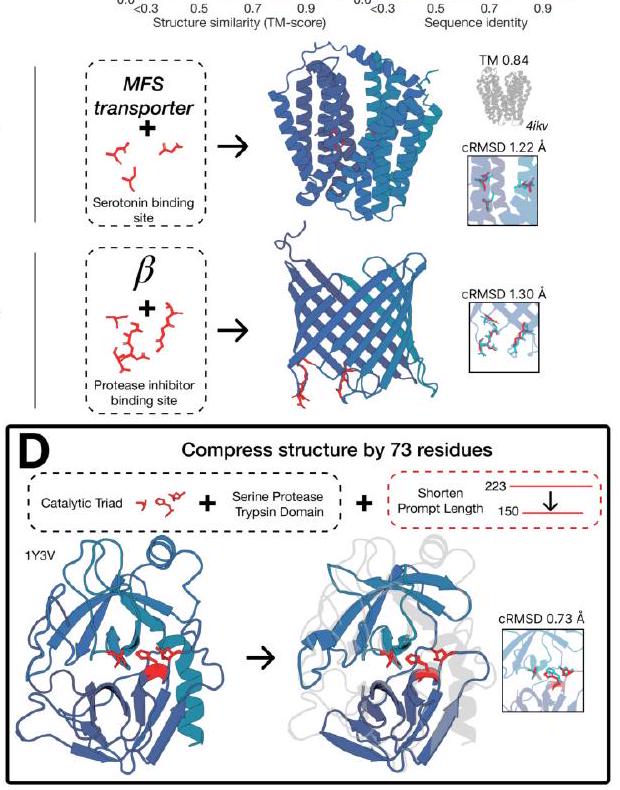

ESM3 is able to follow complex prompts, and has the ability to compose prompts from different tracks, and at different levels of abstraction. To evaluate this ability, we prompt ESM3 with motifs that require the model to solve for spatial coordination of individual atoms, including ones requiring tertiary coordination between residues far apart in the sequence, such as catalytic centers and ligand binding sites.

Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: - ESM3 can follow complex prompts.

Please explain how this is a breakthrough

AI: The aspect of ESM3 (the third-generation Evolutionary Scale Modeling protein language model from EvolutionaryScale) that allows it to follow complex prompts and solve for spatial coordination of individual atoms, including tertiary coordination between residues far apart in the sequence, represents a significant breakthrough in computational biology for several reasons:

Understanding Complex Protein Structures: Protein functions are determined by their three-dimensional structures. The ability to accurately model and predict tertiary and quaternary structures, including the precise spatial coordination of atoms and the folding patterns of amino acid chains, is crucial for understanding how proteins work. This breakthrough implies a level of detail and accuracy that was previously difficult or impossible to achieve, especially for proteins with complex folding patterns and those that require precise coordination of distant residues for their function.

Accelerating Drug Discovery: Knowing the exact arrangement of atoms within catalytic centers and ligand binding sites can dramatically accelerate drug discovery and design. By accurately predicting how proteins fold and where key binding sites are located, researchers can more efficiently design or identify compounds that can specifically interact with these sites to modulate protein function. This can lead to the development of new drugs with higher efficacy and fewer side effects.

Multiscale Modeling: The ability to compose prompts at different levels of abstraction indicates a versatile tool capable of integrating information across different biological scales, from the atomistic to the macromolecular. This multiscale modeling is crucial for understanding how changes at the atomic level (such as mutations that alter a single amino acid) can affect the overall structure and function of a protein, and by extension, impact cellular processes and organismal health.

Overcoming Sequence Distance Constraint: Traditional methods often struggle with residues that are far apart in the linear sequence but come close together in the folded structure to form functional sites. The ability to model tertiary coordination between such residues is a significant step forward, enabling the prediction of protein structures and interactions that were previously challenging due to the limitations in understanding long-range interactions.

Applications Beyond Protein Folding: While the immediate application is in protein structure prediction and analysis, the capabilities of ESM3 extend to other areas such as enzyme design, understanding disease mechanisms at the molecular level (e.g., how mutations disrupt normal protein function), and synthetic biology. This opens up new possibilities for the biotechnology and pharmaceutical industries, among others.

Technological Innovation: To achieve this level of detail and complexity in predictions, significant innovations in machine learning, data handling, and computational power are required. This denotes not just a breakthrough in understanding biological systems but also in the technological and algorithmic approaches used to model such systems.

In summary, ESM3's ability to accurately model the spatial coordination of atoms within proteins, particularly for complexes and distant residues, signifies a leap forward in computational biology and protein science. It enhances our ability to understand life at a molecular level and holds promise for numerous practical applications in medicine, biotechnology, and related fields.

[Figure 2 panel labels: A; B, "Prompting for out-of-distribution folds"]

Figure 2. Generative programming with ESM3. (A) ESM3 can follow prompts from each of its input tracks. Density of faithfulness to prompting for each of the tracks is shown. Generations achieve consistency with the prompt and high foldability (pTM). (B) ESM3 can be prompted to generate proteins that differ in structure (left) and sequence (right) from natural proteins. Prompted generations (blue) shift toward a more novel space vs. unconditional generations (red), in response to prompts derived from out-of-distribution natural structures (upper panel) and computationally designed symmetric proteins (lower panel). (C) ESM3 generates creative solutions to a variety of combinations of complex prompts. We show compositions of atomic level motifs with high level instructions specified through keyword or secondary structure. Fidelity to the prompt is shown via similarity to reference structure (for keyword prompts) and all-atom RMSD to the prompted structure (for atomic coordination prompts). Solutions differ from the scaffolds where the motif was derived (median TM-score $0.36 \pm 0.14$), and for many motifs (e.g. serotonin, calcium, protease inhibitor, and Mcl-1 inhibitor binding sites), we could find no significant similarity to other proteins that contain the same motif. (D) An example of especially creative behavior. ESM3 compresses a serine protease by $33\%$ while maintaining the active site structure.

Please analyze this paragraph from the point of view of an expert scientist and be critical of any omissions or possible sources of problems you see. Please extract each individual fact or idea from this paragraph and display in markdown list format

AI: Analyzing the paragraph critically reveals several interesting facts and ideas about the capabilities of ESM3, but also raises questions regarding omissions or areas where challenges might occur. Below is a deeper dive into each fact or idea extracted from the paragraph:

In summary, while the abilities of ESM3 as described offer promising directions for protein engineering and synthetic biology, the analysis could benefit from a more nuanced discussion on functional validation, real-world applicability, computation limitations, and broader implications of creating novel proteins.

AI: Analyzing the provided text critically from an expert scientist's perspective, several points merit attention regarding omissions and potential sources of problems. The paragraph effectively communicates impressive capabilities of ESM3 in generative programming for protein design, yet some aspects could benefit from further specificity or clarification.

Input Tracks and Prompting Details: While it mentions that ESM3 can follow prompts from its input tracks, the paragraph does not specify what these input tracks entail or how they are designed. The methodology behind the construction of these prompts and the specification of "density of faithfulness" could be more transparent. This information is crucial for reproducibility and understanding how ESM3 interprets and prioritizes various prompts.

Benchmarking and Measurements: The paragraph references measurements like high foldability (pTM), similarity to reference structure, and all-atom RMSD without offering a clear definition or the rationale behind choosing these specific metrics. Furthermore, the context of what values are considered high or low for these metrics in the field of protein design is missing.

Benchmark Against Current Standards: There is a lack of direct comparison to existing generative models or protein design algorithms. While the novelty is implied through the generation of proteins differing in structure and sequence from natural proteins, a more nuanced discussion on how ESM3 improves or differs from current methods could strengthen its stated contributions.

Representation of Novel Space: The paragraph indicates ESM3's ability to generate proteins that shift toward a "more novel space" but does not elaborate on how this novelty is quantified or its implications for practical applications, such as drug design or biotechnology. A more in-depth examination of the practical utility of these novel proteins would be beneficial.